September 29, 2009

Dreamtime

Robin has written a very interesting summary and conclusion to a series of blog posts about far future economics: This is the Dream Time.

His basic argument is that in the long run replicators will replicate to fill their ecological/economic niche. Since the amount of "stuff" that we can get is limited by at most polynomial expansion across the cosmos (and reproduction can be exponential), our descendants will be under strong pressure to be frugal, probably existing on a subsistence level.

At the same time, if we expand outwards, lightspeed limitations make it hard to communicate with every part of the metacivilization. This would make it hard to achieve the strong cultural control needed to keep eager replicators from replicating fast, as well as cultural or species homogeneity. He thinks they would still experience lives worth living.

His conclusion is that we are living in a unique era where we have broken free from the Malthusian constraints of the past, and not yet hit the constraints of the future. This gives us unprecedented freedom and wealth, including the freedom to be completely wrong with (almost) impunity. So maybe we are living in the golden age *now*?

An analogy would be the demographic transition. In the old days, mortality and fertility were high. Then better medicine and lifestyle arrived, and mortality declined. The result was a transition through a state of high fertility and low mortality, until fertility began to decrease. The result is of course a major shift in human societies - for a while there are extra people of working age, the range of possible life projects changes, time discounting changes, the timing of inheritance changes, and so on.

Although Robin seems pretty happy with this scenario, many others seem to have trouble with it on various grounds:

Some reject it because they think speaking about far-future humanity is impossible: crucial limitations such as economics or lightspeed will be abolished eventually. Another possibility is that humans will change themselves so the predictions are irrelevant. But while long-term predictions are obviously vulnerable, stuff like thermodynamics, information limits, lightspeed, scale separation and economics might be pretty robust. And while we may have little to say about what is actually going on and being valued, we might say a bit about the low-level stuff.

Many seem to mistake Robin's position as saying that in 10,000 years there will be an enormous population or value per atom. This is a misreading: the absurd values only show up if one assumes economic growth to continue without limit. Robin argues that eventually the exponential growth of humans and economy will taper off because of limited stuff and limited complexity of the laws of physics. Not everybody agrees that a virtual economy needs to have its economic growth bounded by the amount of matter available to build the base level hardware: clearly we can value a particular atom nearly arbitrarily high (e.g. if it was the key to the survival of all of humanity), and cultural products of relatively low information content can gain tremendous economic (and perhaps hedonistic) value - just consider certain modern art or cartoons. But a finite amount of stuff still means a finite amount of memory and value-experiencing machinery.

Another possibility is that humans or their descendants will coordinate their growth so that it is slower than the growth of available stuff, producing an ever richer population. But then we had better do that very soon, because within a short (by astronomical, biological and macrohistorical standards) time span we will likely spread over distances where coordination is hard to re-achieve.

It seems that his argument is vulnerable (insofar as anything is vulnerable) to the Doomsday argument: why should we find ourselves here at the unusual transition rather than among the teeming masses of posthumans of the endless future? There are two unsettling potential non-extinction answers: one is that the transition also means the end of conscious observers, the other is that we are living in a simulation.

The first option would correspond to Nick's scenario of mindless outsourcers or perhaps the galactic locust swarm of Robin's "burning the cosmic commons" scenario - we evolve into a non-conscious but evolutionarily successful form. The reason we find ourselves here is simply that most observers will exist during the lead-up to the non-observer posthuman phase (and not during the long period of low population before modernization).

The second option would be that Nick's simulation argument is true: most observers do exist within the teeming posthuman future, but many are deluded about the actual time because they live in history simulations. In fact, Robin's blog makes this even more plausible by arguing that our era, for various reasons, is the most interesting and mythical era to the posthumans. We are living in their dreamtime, so of all eras to make simulations of, this is one of the most popular. It is/was not just an era of unprecedented wealth, but also a lot of drama, bizarre beliefs and mental states, important decisions that shaped the modern posthuman world.

So, if you buy Robin's argument *and* the doomsday argument, you should expect to be among the last "real humans" before we turn into posthuman goo, or that you live in a historical recreation. If you buy the doomsday argument but do not believe in Robin's argument, then there are a few other options:

- The vanilla doomsday scenario.

- The future posthumans are a single group-mind, counting as just one observer.

- We develop coordination enough to keep our posthuman population very small (and rich).

- Posthumans are not in our reference class.

- Various vanilla escapes: not all humans are in your reference class, there are alien civilizations in your reference class etc.

Personally, I find Robin's argument intriguing. I'm not quite as horrified as many seem to be by the claim that in the future almost everybody will be very poor. After all, being poor in a western society today actually means a far higher standard than most of our ancestors could achieve. It is not inconceivable that we would envy desperately poor posthumans if we knew about their situation.

It has slightly increased my belief in the chance that this is a simulation, since the world seems almost suspiciously interesting. However, Freeman Dyson suggested a curious possibility: what if we are living in the most interesting of all possible worlds? If that turned out to be true, then I would expect the tapering off of discovery Robin is predicting will not happen, and the future would be even more inhomogeneous and confusing than we could possibly imagine.

Upload ethics

I have been interviewed by David Orban about the ethics of whole brain emulation, as a preparation for the Singularity Summit.

Of course, this is just a very brief interview about a subject that deserves a much, much longer talk - handling moral uncertainty about the status of software, the ethics of finding uploading volunteers, the vulnerability of uploads, the social impact, the ethics of splitting, not to mention brain editing. A paper from some of us FHI people should show up eventually; we have been brainstorming for a while.

I look forward to doing more interviews with David. This one was fun!

September 27, 2009

The crossover to leave all crossovers in the dust

Sometimes fanfic goes off the *really* deep end. Eliezer Yudkowsky's The Finale of the Ultimate Meta Mega Crossover is a great Vernor Vinge and Greg Egan fanfic.

As one of the commenters on lesswrong said:

"But yeah, that story was fun. As delightfully twisted as Fractran. (Yes, I am comparing a story to a model of computation. But, given the nature of the story, is this not perfectly reasonable? :))"

My favourite line is probably: "You had a copy of my entire home universe?"

Overall, the big question is of course where the Born probabilities come from. And people say fanfic is just mindless fun.

September 24, 2009

Neurosport

Practical Ethics: Supercoach and the MRI machine. A little thought experiment about using neuroscience to improve coaching, a response to some of the issues mentioned by Judy Illes.

In fact, a software "supercoach" would probably be most important outside sports. Imagine having a personal coach helping you to maximize your performance in education, your job, love or cooking. Of course, many activities do not admit a nice success measure, but if we can (and this is so far just a hope) identify high motivation states and what training actually works, then we could likely enhance a lot of areas. But it is going to take more than good MRI for this: we need to actually monitor and map out motivation, behaviour and training responses for a lot of areas. That will likely prove both slow and expensive.

Be the best uncle you can be!

Mind Hacks has a great characterization of the transhumanist community:

Transhumanists are like the eccentric uncle of the cognitive science community. Not the sort of eccentric uncle who gets drunk at family parties and makes inappropriate comments about your kid sister (that would be drug reps), but the sort that your disapproving parents thinks is a bit peculiar but is full of fascinating stories and interesting ideas.

That is exactly the kind of uncle I try to be.

September 23, 2009

The hot limits to growth

Over at Overcoming Bias Robin is making the argument that economic growth will eventually be limited: even by expanding at lightspeed the amount of matter we can turn into something valuable will only grow as the cube of time, but any positive exponential economic growth rate will outrun this sooner or later. Others are not so convinced, arguing that value is not strictly tied to matter but to patterns of matter, possibly allowing exponential growth of value even when matter doesn't accumulate fast enough.
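A minimal sketch of the "exponential outruns cubic" point, assuming resources grow as t^3 and demand as exp(rate*t). The function names, the `scale` parameter and the 1% rate are hypothetical choices for illustration only:

```python
import math

def cubic_to_exponential_ratio(t, rate, scale=1.0):
    """Resources (scale * t**3) divided by exponential demand exp(rate * t)."""
    return scale * t**3 * math.exp(-rate * t)

def peak_time(rate):
    """Time where the ratio is largest; after this it can only shrink."""
    # d/dt [t^3 exp(-rate t)] = 0  gives  t = 3 / rate
    return 3.0 / rate

rate = 0.01  # hypothetical growth rate per unit time
for multiple in (1, 10, 100):
    t = multiple * peak_time(rate)
    print(f"t = {t:8.0f}   resources per unit of demand = {cubic_to_exponential_ratio(t, rate):.3e}")
```

Whatever positive rate is chosen, the ratio peaks at t = 3/rate and then collapses; a lower rate only postpones the peak.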

This got me thinking of the thermodynamic problems of an expanding supercivilization, trying to turn cosmic matter into something carrying value.

A civilization expanding at velocity v controls a spherical volume V = 4pi(vt)^3/3 at time t, containing M = rho V units of mass. Either information is encoded in matter, in which case the maximal amount of information I the civilization can have is Imax = kM = (4 pi k rho/3) v^3 t^3 (the "classical case") or it is bounded by the Bekenstein quantum gravity bound, giving I = kMR = (4 pi k rho/3) v^4 t^4 (the "quantum case").

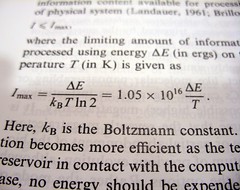

Expanding means that it takes disorganized matter and turns part or all of it into information storage. Disregarding any energy cost of doing this there is still an entropy cost that has to be paid due to the Brillouin inequality: every bit of matter has to be set to a certain value, erasing at least one bit of information. This produces an energy cost of P = kb T ln(2) I' that has to be dissipated as waste heat. In the classical case P = [4 pi k rho kb T ln(2)] v^3 t^2 and in the quantum case P = [16 pi k rho kb T ln(2) / 3] v^4 t^3.

(Note that I assume the civilization does not incur any thermodynamic operating costs. It runs all its computations as environment-friendly reversible computations etc.)

This waste heat has to be radiated away somewhere. Plugging this into the Stefan-Boltzmann law for a sphere of radius vt gives P = 4 pi sigma T^4 v^2 t^2. In the classical case this gives T = {[k rho kb ln(2) / sigma] v}^(1/3). The temperature of the civilization grows as the cube root of the expansion velocity. The quantum case on the other hand produces T = {[4 k rho kb ln(2) / 3 sigma] v^2 t}^(1/3). Here the temperature rises with the cube root of time and the 2/3rd power of velocity: the civilization will have to slow down to avoid overheating just from its own expansion.
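Spelled out, the two temperature formulas come from equating the entropy-cost power with black-body radiation from the surface area 4 pi (vt)^2. The symbols are the same as in the text, and nothing is assumed beyond setting the two powers equal:

```latex
\begin{aligned}
\text{classical:}\quad
  & 4\pi k\rho\,k_B T\ln 2\; v^3 t^2 = 4\pi\sigma T^4 v^2 t^2
  \;\Longrightarrow\; T^3 = \frac{k\rho\,k_B\ln 2}{\sigma}\,v \\[4pt]
\text{quantum:}\quad
  & \frac{16\pi}{3}\,k\rho\,k_B T\ln 2\; v^4 t^3 = 4\pi\sigma T^4 v^2 t^2
  \;\Longrightarrow\; T^3 = \frac{4k\rho\,k_B\ln 2}{3\sigma}\,v^2 t
\end{aligned}
```

In the classical case the t^2 factors cancel, which is why that temperature does not depend on time at all.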

Plugging in numbers, the constant in the brackets in the quantum case is 8.5*10^8 K^3 s / m^2. For lightspeed expansion this produces a temperature growing as 4.25*10^8 t^(1/3) K - it would look like a supernova. Not terribly likely. Assuming that the civilization wants to keep a temperature of a few (let's say 100) Kelvin instead (allowing me to ignore cosmic background radiation) gives an expansion rate of v = 0.0343/sqrt(t) m/s. That civilization will creep forward at a literal snail's pace - the entropic cost of converting matter into the maximal information density is simply astronomical.

Ok, what about the classical civilization? Let's assume that it stores one bit per hydrogen atom mass, making k 6*10^26 bits/kg. That gives a temperature of 0.00246 v^(1/3) K. For lightspeed expansion I get a mere 1.6479 K - so little that cosmic background radiation clearly overshadows it (and a more complex radiation model is needed).
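The numbers above can be rechecked from the two constants quoted in the text alone. This is a rough sketch, assuming T^3 = C v^2 t for the quantum case and T = 0.00246 v^(1/3) for the classical case; the function names are made up for the example, and the underlying density and k values are not reconstructed here:

```python
import math

C_QUANTUM = 8.5e8               # K^3 s / m^2, bracket constant quoted in the text
CLASSICAL_PREFACTOR = 0.00246   # K per (m/s)^(1/3), quoted in the text
LIGHTSPEED = 299_792_458.0      # m/s

def quantum_temperature(v, t):
    """Temperature (K) of the 'quantum' civilization at speed v (m/s), time t (s)."""
    return (C_QUANTUM * v**2 * t) ** (1.0 / 3.0)

def quantum_speed_limit(temperature, t):
    """Largest speed (m/s) that keeps the quantum case at the given temperature."""
    return math.sqrt(temperature**3 / (C_QUANTUM * t))

def classical_temperature(v):
    """Time-independent temperature (K) of the 'classical' civilization."""
    return CLASSICAL_PREFACTOR * v ** (1.0 / 3.0)

print(quantum_temperature(LIGHTSPEED, 1.0))   # prefactor of t^(1/3) at lightspeed
print(quantum_speed_limit(100.0, 1.0))        # prefactor of 1/sqrt(t) at 100 K
print(classical_temperature(LIGHTSPEED))      # classical case at lightspeed
```

Since the inputs are the rounded constants, the outputs agree with the quoted figures only to about three significant digits.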

The moral seems to be that ordinary supercivilizations can expand at a brisk pace, but civilizations near the limits of quantum gravity are terribly constrained by the laws of thermodynamics. Not to mention that if they mess up, their dense information storage and processors could of course implode into black holes. Speaking of which, this is probably how such a civilization would actually cool itself. Just create sufficiently big holes to have a very low Hawking temperature, and dump your entropy in there (a Wei Dai inverse Dyson sphere).

Of course, if the civilization actually dissipates energy inside its volume it is in more thermodynamic trouble. This is likely, since it seems unlikely that *all* processes an advanced civilization would engage in are perfectly reversible. Error correction of bits that flip due to thermal noise or other causes requires paying entropic costs, and even communication and sensing have entropy costs.

But while the civilization volume grows as the cube of time (and hence the amount in need of dissipation) the area grows as the square of time - the amount of cooling area per cubic meter of civilization is decreasing as 1/t. The civilization either has to heat up (more bit errors to fix), slow down its activity or use black hole cooling.

Ten sigma: numerics and finance

I was recently asked "What is the likelihood of a ten sigma event?"

For those of you who don't speak statistical jargon, this is a random event drawn from the Gaussian distribution that happens to be more than ten standard deviations ("sigmas") away from the mean. About 32% of events fall outside one sigma, just 5% beyond 2 and a mere 0.3% reach beyond 3. Ten sigma is very, very unlikely - but how unlikely?

Numerics

There are plenty of calculators online that allow you to calculate this, but most turn out to be useless since they don't give you enough decimals: the answer becomes zero. Similarly there are tables in most statistical handbooks, but they usually reach at most to 6 sigma.

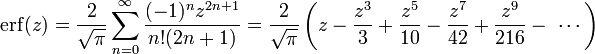

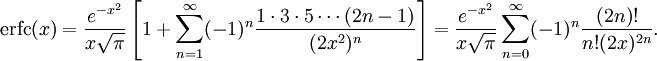

OK, but how do you calculate the probability of n sigma events? The answer is deceptively simple: the probability is 1-erf(n/sqrt(2)). Erf is the error function,

$$\operatorname{erf}(x) = \frac{2}{\sqrt{\pi}} \int_0^x e^{-t^2}\,dt$$

It is one of those functions that can only be written in terms of integrals and tend to be called "special" or "non-elementary". Erf is a pretty common special function.

I of course opened my trusty Matlab and became annoyed, because it only reached to 8 sigma. Beyond this point the difference between 1 and erf(x) became so small that the program treated it as zero. This is called cancellation, and is a standard problem in numerical analysis.
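The cancellation is easy to reproduce in any double-precision environment. Here is a minimal sketch in Python, used only as a stand-in for Matlab and assuming ordinary IEEE double precision; `math.erf` and `math.erfc` are standard-library functions, and the two wrapper names are made up:

```python
import math

def two_sided_tail_naive(n):
    """P(|Z| > n) computed as 1 - erf(n / sqrt(2)); suffers from cancellation."""
    return 1.0 - math.erf(n / math.sqrt(2.0))

def two_sided_tail(n):
    """Same probability via the complementary error function, no subtraction."""
    return math.erfc(n / math.sqrt(2.0))

for n in (3, 6, 8, 10):
    print(n, two_sided_tail_naive(n), two_sided_tail(n))
```

Once erf(x) rounds to exactly 1, the subtraction has nothing left to work with and returns zero. Calling the complementary function directly avoids this, although how accurate a given library is far out in the tail is still worth checking rather than trusting.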

OK, I needed to either improve Matlab precision (doable using some add-on libraries, but annoying) or to calculate 1-erf(x) in a better way. Looking at the Wikipedia page I saw a juicy Taylor series that I stole:

Unfortunately this requires a lot of terms before it converges. For x=10, the terms become hideously large (the hundredth term is 10^40) before declining *very* slowly. Worse, Matlab cannot handle factorials beyond 170!, so the calculation breaks down after the first 170 terms without converging.
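For reference, here is the standard Maclaurin series of erf, assuming that is the series meant above, together with a rough size estimate of its hundredth term at x = 10:

```latex
\operatorname{erf}(x) = \frac{2}{\sqrt{\pi}} \sum_{n=0}^{\infty} \frac{(-1)^n\, x^{2n+1}}{n!\,(2n+1)},
\qquad
\left.\frac{x^{2n+1}}{n!\,(2n+1)}\right|_{x=10,\;n=100}
= \frac{10^{201}}{100!\cdot 201}
\approx \frac{10^{201}}{1.9\times 10^{160}}
\approx 5\times 10^{40}
```

The alternating signs mean these enormous terms must cancel almost perfectly to leave a result below 1, which is hopeless in double precision even before the factorials overflow.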

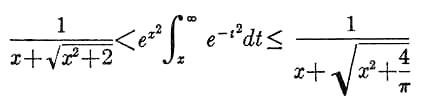

The next formula I tried was an asymptotic formula for erfc(x), the complementary error function which happens to be 1-erf(x) (given how important Gaussian distributions are, it is not that unexpected that this useful sibling of erf got its own name despite being just a flipped version).

I don't like asymptotic series. For any given x, if you add enough terms you will get a great approximation... but beyond that, if you add more terms the series diverges. I have never really gotten my head around them, but they can be useful. Except in this case I got a suspicion that the answer (7.62*10^-24) was wrong - but how could I check it?

At this point I remembered to turn to The Good Book. The Big Blue Book to be exact, Abramowitz and Stegun's Handbook of Mathematical Functions. As a kid I was addicted to cool formulas, and when I found a copy of this volume in the cellar of Rönnells used book store in Stockholm I used most of my savings to buy it. Yes, most was completely useless tables of Bessel functions or even random numbers, but it also contained pages filled with the strangest, most marvellous analytical expressions you could imagine!

In any case, looking on page 298 I spotted something promising. Equation 7.1.13 shows that erfc lies between two pretty modest-looking functions:
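Rearranged for erfc, the handbook inequality reads as follows. This is a transcription, so treat the exact layout as an assumption; it does reproduce the bounds tabulated in this post:

```latex
\frac{2}{\sqrt{\pi}}\,\frac{e^{-x^2}}{x+\sqrt{x^2+2}}
\;<\; \operatorname{erfc}(x) \;\le\;
\frac{2}{\sqrt{\pi}}\,\frac{e^{-x^2}}{x+\sqrt{x^2+4/\pi}},
\qquad x \ge 0
```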

If this worked, it would be possible to calculate bounds on 1-erf(10/sqrt(2)). But would it work without cancellation or other nasty numerics? I plotted the three functions side by side... and the curves nearly coincided perfectly! The formula is a very decent approximation when x is above 3.

Finally I could calculate my 10 sigma probability. As the table shows the Matlab implementation of the erf function starts misbehaving even at n=8, when it crosses the lower bound.

| n | Lower bound | 1-erf(n/sqrt(2)) | Upper bound |
|---|---|---|---|
| 1 | 2.990923*10^-1 | 3.173105*10^-1 | 3.356963*10^-1 |
| 2 | 4.472758*10^-2 | 4.550026*10^-2 | 4.737495*10^-2 |
| 3 | 2.683711*10^-3 | 2.699796*10^-3 | 2.770766*10^-3 |
| 4 | 6.318606*10^-5 | 6.334248*10^-5 | 6.444554*10^-5 |
| 5 | 5.726320*10^-7 | 5.733031*10^-7 | 5.802696*10^-7 |
| 6 | 1.971960*10^-9 | 1.973175*10^-9 | 1.990693*10^-9 |
| 7 | 2.558726*10^-12 | 2.559619*10^-12 | 2.576865*10^-12 |
| 8 | 1.243926*10^-15 | 1.221245*10^-15 | 1.250748*10^-15 |
| 9 | 2.256867*10^-19 | | 2.266717*10^-19 |
| 10 | 1.523831*10^-23 | | 1.529245*10^-23 |

So there we are: ten sigmas occur with probability 1.5265*10^-23 (plus or minus 2.7*10^-26). My asymptotic calculation was 50% off! Of course, if I really needed to trust the result I would have done some extra checking to see that there were no extra numerical errors (dividing by the functions above produces 1, so there doesn't seem to be any cancellation going on).
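The bounds are cheap to recompute. This is a minimal Python sketch, assuming they have the form (2/sqrt(pi)) exp(-x^2) / (x + sqrt(x^2 + c)), with c = 2 for the lower bound and c = 4/pi for the upper bound. That is my reading of Abramowitz and Stegun 7.1.13, and the function names are made up for the example:

```python
import math

def erfc_bounds(x):
    """Lower and upper bounds on erfc(x) for x >= 0 (assumed form of A&S 7.1.13)."""
    prefactor = 2.0 / math.sqrt(math.pi) * math.exp(-x * x)
    lower = prefactor / (x + math.sqrt(x * x + 2.0))
    upper = prefactor / (x + math.sqrt(x * x + 4.0 / math.pi))
    return lower, upper

def sigma_tail_bounds(n):
    """Bounds on the two-sided probability of an n-sigma Gaussian event."""
    return erfc_bounds(n / math.sqrt(2.0))

for n in range(1, 11):
    lower, upper = sigma_tail_bounds(n)
    midpoint = 0.5 * (lower + upper)
    half_width = 0.5 * (upper - lower)
    print(f"{n:2d}  {lower:.6e}  {upper:.6e}  {midpoint:.4e} +/- {half_width:.1e}")
```

Nothing here subtracts nearly equal numbers: the tiny factor exp(-x^2) is computed directly and then divided by a well-conditioned sum, so the result keeps full relative precision far out in the tail.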

It should be noted that the above table is the probability of an outcome being more than 10 sigmas above or below the mean. If you are interested in just outcomes going 10 sigma high, then it is half of these numbers.

Finance

Here at FHI we actually care about ten sigma events. Or rather, what apparent ten sigma events can tell us about the correctness of models people make. Consider this quote:

According to their models, the maximum that they were likely to lose on any single trading day was $45 million—certainly tolerable for a firm with a hundred times as much in capital. According to these same models, the odds against the firm's suffering a sustained run of bad luck—say, losing 40 percent of its capital in a single month—were unthinkably high. (So far, in their worst month, they had lost a mere 2.9 percent.) Indeed, the figures implied that it would take a so-called ten-sigma event—that is, a statistical freak occurring one in every ten to the twenty-fourth power times—for the firm to lose all of its capital within one year.

R. Lowenstein, "When Genius Failed: The Rise and Fall of Long-Term Capital Management," Random House, 2001, pp. 126–127.

(I lifted the quote from The Epicurean Dealmaker, who quite rightly back in 2007 pointed out that people had not learned this lesson. There is also this alleged 23-sigma event - the probability for that in a Gaussian distribution is 4.66*10^-117.)

Whenever a model predicts that something will fail with a negligible chance, the probability that the model itself is wrong becomes important. Quite often that probability overshadows the predicted risk by many orders of magnitude. Making models robust is much more important than most people expect (this was the point of our LHC paper). In the case of finance, it is pretty well understood (intellectually if not in practice) that heavy tails produce many more big events than the Gaussian model predicts. When we see a 10-sigma event it is a sign that our models are wrong, not that we have been exceedingly unlucky.
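A toy calculation makes the point concrete. All numbers below are hypothetical, chosen only for illustration: the Gaussian model says 1.5*10^-23, we give the model a one-in-a-thousand chance of being wrong, and assume the event has a 1% chance if it is wrong.

```python
def effective_probability(p_event_if_right, p_model_wrong, p_event_if_wrong):
    """Total probability of the event, averaging over 'model right' and 'model wrong'."""
    return (1.0 - p_model_wrong) * p_event_if_right + p_model_wrong * p_event_if_wrong

p_model = 1.5e-23   # what the Gaussian model predicts (ten sigma)
p_wrong = 1e-3      # hypothetical chance that the model itself is wrong
p_alt = 1e-2        # hypothetical chance of the event if the model is wrong

p_total = effective_probability(p_model, p_wrong, p_alt)
print(p_total, p_total / p_model)
```

With these made-up inputs the total probability is about 10^-5, some eighteen orders of magnitude above the model's own figure, and it is set almost entirely by the "model wrong" branch.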

This is true for mathematics too. While math is consistent and "perfect" (modulo Gödel), our implementations of it - in brains or software - are essentially models too. The chance that any math function is badly implemented, wrongly implemented or has numeric limitations is pretty high. One should always check correctness to the extent the cost of error times its likelihood suggests. A bit of cancellation might not matter in a computer game, but it does matter a lot when launching a space probe.

23 Sept 2009 Update: Toby Ord kindly pointed out a miscalculation of the error of the asymptotic series. One should never math blog after midnight. The real error is also very close to 50%, so it is likely that it was due to a missed factor of 2 somewhere. See what I mean about the likelihood of errors in human math implementations?

24 Sept 2009 Update: Checking the result in Mathematica with a high precision setting, I get the ten sigma probability to be 1.523970*10^-23, which is inside the confidence interval.

September 22, 2009

My soul is open source

My soul, or rather an applet of it, can be seen here.

The origin was an art project from Information is Beautiful, where the blog asked for crowdsourced artworks depicting the originators' souls. Since I believe my core processes are self-organizing emergent phenomena I decided to make a flocking system, where words taken from this blog swim around forming temporary clusters. I based it on Daniel Shiffman's Processing example, adding mostly colour management.

It might be nothing more than animated personalised fridge magnet words, but I find it relaxing to watch.

September 21, 2009

Life extension model

Dirk Bruere on the Extrobritannia mailing list asked a provocative question:

Any serious H+ predictions of longevity trends between now and (say) 2050 for various age groups? I would expect our predictions to start to deviate from the "official" ones at some point soon.

This led me to develop a simple model of life extension demographics. I'm not a professional demographer and it depends on various assumptions, so take this with a suitable amount of salt.

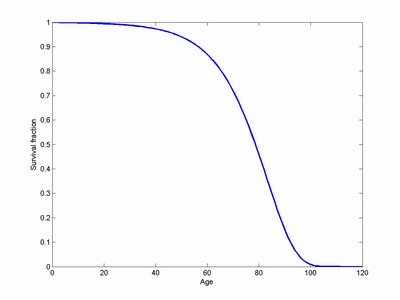

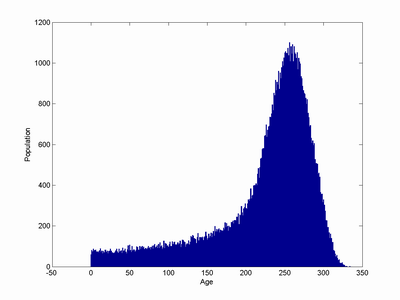

Summary of my results: I do not see any unexpected demographic changes before life extension breakthroughs, and age at death will not rise until a while after - despite potentially extreme rises of cohort life expectancy. (Flickr photostream) I also think we 30+ transhumanists should be seriously concerned about speeding basic and transitional research, and look at alternative possibilities (cryonics, possibly WBE).

Model

The death rate per year is equal to min(1, 1/10000 + 10.^(agedam*3/80-4.2)). This is a Gompertz-Makeham law with a low age-independent component and an exponential component that is driven by ageing damage (agedam). Ageing damage is initially increasing at a rate of 1 per year. This model produces a passable survival curve with a life expectancy of 78.6 years.
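A minimal sketch of this mortality law in Python. The yearly bookkeeping is an assumption, since the original simulation code is not shown: the death probability is applied once per year, and life expectancy is counted as expected completed years. The result should land in the same general range as the figure quoted above rather than match it exactly:

```python
def death_rate(agedam):
    """Yearly death probability for a given amount of accumulated ageing damage."""
    return min(1.0, 1.0 / 10000.0 + 10.0 ** (agedam * 3.0 / 80.0 - 4.2))

def life_expectancy(damage_per_year=1.0, max_age=2000):
    """Expected number of completed years of life at a constant damage rate."""
    alive = 1.0            # probability of still being alive
    expected_years = 0.0
    agedam = 0.0
    for _ in range(max_age):
        alive *= 1.0 - death_rate(agedam)   # survive this year or not
        expected_years += alive             # one more completed year if alive
        agedam += damage_per_year
        if alive < 1e-12:                   # nobody left; stop early
            break
    return expected_years

print(life_expectancy())      # baseline: one unit of damage per year
print(life_expectancy(0.5))   # damage accumulating at half the normal rate
```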

Life extension takes the form of a reduction in the rate of age damage per year. This stretches the mortality curve. I assume that due to technology development it has a sigmoid form:

deltadamage = (1-.5*fixable)-fixable*.5*tanh((year-midyear -(1-wealth)*wealtheffect)/g)

where fixable is the fraction of age damage that can be fixed in the long run, midyear is the inflexion point of the sigmoid (50% of the possible reduction in age damage has been achieved) and g is a factor determining how rapidly the transition from one unit of damage per year to 1-fixable units per year occurs. For large g this is a slow transition, for small g it occurs quickly (as a rule of thumb, the "width" of the transition is 4g years).

To model technology diffusion and cost effects each individual has a wealth statistic uniformly distributed between 0 and 1, which determines (together with the parameter wealtheffect) how many years "behind" the individual is. A person with zero wealth will be wealtheffect years behind the technology curve.
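The damage-rate formula, transcribed into Python as a sketch. The function name and argument order are my own; the expression itself is the one given above:

```python
import math

def damage_rate(year, wealth, fixable, midyear, g, wealtheffect):
    """Ageing damage accrued per year for a person of given wealth (0..1)."""
    lag = (1.0 - wealth) * wealtheffect          # years behind the technology curve
    progress = math.tanh((year - midyear - lag) / g)
    return (1.0 - 0.5 * fixable) - 0.5 * fixable * progress

# Example: radical life extension (fixable=1), fast transition (g=4),
# 15 years between richest and poorest, midyear at year 150.
for year in (100, 140, 150, 165, 200):
    rich = damage_rate(year, 1.0, 1.0, 150.0, 4.0, 15.0)
    poor = damage_rate(year, 0.0, 1.0, 150.0, 4.0, 15.0)
    print(year, round(rich, 3), round(poor, 3))
```

Long before midyear the rate is 1, long after it is 1-fixable, and the poorest person traces the same curve wealtheffect years later.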

Deaths are exactly balanced by an equal number of births. This corresponds to the assumption of a stable population. This is of course questionable, and I also get some problems when bumps of the population die at about the same time.

100,000 individuals were simulated over 1000 years, with the midyear set to year 150. Data were collected on when individuals died, which allows calculating the cohort life expectancy for each year of birth as well as the average age at death in different years.

Scenario parameters

What are plausible values for fixable, g and wealtheffect?

In the case of fixable, this could range from 0 (no life extension is possible) to more than 1 (age reversal is possible). An optimistic scenario (radical life extension) assumes fixable is 1, a more sceptical scenario (moderate life extension) assumes it is 0.5.

g is even trickier to estimate, since it is a combination of scientific/medical progress and technology diffusion. It will depend on whether the treatment is simple like a vaccine (rapid diffusion) or requires extensive equipment and expertise like MRI (slow diffusion). Similarly, for ageing we have reason to believe that testing will be slow (biomarkers will only get us part of the way). If something like SENS is used the development might actually be something like the sum of 7 separate sigmoids, each fixing part of the problem, again broadening the curve. My optimistic scenario is that the transition takes ~15 years (g=4), comparable to the spread of ultrasound, CT scanners and hybrid seedcorn. A pessimistic scenario is more like the introduction of the car or flu vaccine, producing a ~50 year transition (g=12.5).

The effect of wealth is hard to model properly, especially since this will be strongly affected by societal choices. The optimistic model is that the difference between top and bottom will be on the order of 15 years (diffusion of modern gadgets; likely most plausible for simple interventions or with strong subsidies), the pessimistic model a 50-year interval. There is plenty of room here for further experiments, such as assuming that a certain fraction never adopts it (for wealth or ideological reasons), skewed income distributions etc.

These assumptions provide us with 8 possible models, spanning the assumptions.

To apply the model to the real world, we should also take into account when the technology is "discovered". As a very rough estimate, it takes at least 10 years in the lab to produce something ready for clinical trials, which take 10 more years. So we should cautiously estimate that midyear occurs 25+2g years after the discovery. That is, 32.5 years for the optimistic scenario (taking 2g as half of the ~15 year transition, i.e. 7.5 years), and 50 years for the pessimistic one. This is used to set year 0 to the "discovery", allowing us to compare how quickly things change after it.

Simulation results

The overall behaviour of the model is simple: initially there is a steady state age distribution corresponding to the pre-life extension status quo. As life extension arrives the distribution begins to move right. The lower mortality produces lower birth rates, and the population becomes dominated by a lump of older people who were lucky enough to get treatment in time. As the peak of "first immortals" marches onwards it gradually declines due to the constant mortality rate, but it remains significant for a very long time. Eventually the model converges to a very elongated exponential distribution entirely due to the constant mortality.

Calculating mean age at death in the model is simple, while cohort life expectancy only works up to the point where there are survivors at the end of the simulation. Hence for some of the simulations the life expectancy is only plotted up to the birth year of the first survivor. There are some oscillations in average age at death due to synchronized fluctuations of the population; these are artefacts due to the simplistic birth model (however, in a real demographic model they could also occur due to the simultaneous passage of large cohorts through the fertile age range).

Assuming fixable=0.5 produces a lifespan of ~140 years, while for fixable=1 it is 2000+ (my constant mortality rate should likely be higher to be plausible).
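These long-run lifespans can be cross-checked without running the full simulation. The following is a rough Python sketch (not the original Octave code) that assumes a person accumulates age damage at a fixed rate of 1-fixable per year for their whole life, which is what the appendix model converges to once the technology is fully adopted, and uses the same yearly death probability as the appendix. The function names are my own.

```python
def death_probability(damage):
    """Yearly death probability from the appendix model, capped at 1."""
    return min(1e-4 + 10 ** (damage * 3 / 80 - 4.2), 1.0)


def expected_age_at_death(damage_per_year, max_age=200_000):
    """Expected age at death when damage grows by a fixed amount each year.

    Uses E[age] = sum over ages of the probability of still being alive
    at the start of that year.
    """
    alive = 1.0       # probability of being alive at the start of the year
    expected = 0.0
    for age in range(1, max_age + 1):
        expected += alive
        alive *= 1.0 - death_probability(damage_per_year * age)
        if alive < 1e-12:   # remaining contribution is negligible
            break
    return expected


if __name__ == "__main__":
    for fixable in (0.0, 0.5, 1.0):
        print(fixable, round(expected_age_at_death(1.0 - fixable), 1))
```

For fixable=1 the damage rate is zero, so mortality stays at its floor of 1/10000 + 10^-4.2, about 1.6 per 10,000 per year. That gives an expected lifespan of roughly 6,000 years, well beyond the 2000-year simulation window.
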

Flickr photostream of the scenarios.

The effect on real life expectancy (rather than estimated life expectancy) starts around a *lifetime* earlier than the midyear of the technology - it gives enough help to the already old when it arrives that they will survive further. More radical life extension has a quicker take-off. Note that this take-off occurs long before there is any noticeable effect on the average age at death: that only occurs after the technology has both matured and become widespread. This is because the deaths during this intermediate period are largely due to people who never got life extension, while deaths after the technology is widespread have been influenced by its effects.

This implies that the discrepancy between current forecasts and reality may be far bigger than most people think, if we are right about the feasibility of radical life extension this century. It also shows that even if effective life extension is spreading in a society the demographic indicators will not react quickly to it.

In terms of prediction, this model suggests that we will not see any demographic changes before the breakthroughs, and age at death will not rise until a long while after (roughly wealtheffect + 4g years). The true observables that predict a breakthrough will likely be in the form of lab work and clinical trials, not demographics.

Guesses

What about our chances? It all depends on when we think the basic solutions are going to be discovered. In the earliest scenario (radical life extension, rapid tech improvement, little wealth effect) cohort life expectancy starts to increase 25 years *before* the discovery. In fact, at the time of discovery most people under 30 will be around for indefinite life extension. If the technology development is slower, then the cohort born at the same time as the discovery will "just" have about 150-230 years of life expectancy, depending on how fast it spreads in society.

If only mild life extension is possible, people born at the discovery time still have more life expectancy than expected, although not as much as future generations will have. People under 50 will get some life extension if societal spread is fast, and the under 30s if it is slow.

My personal intuition is that we are not far from early research breakthroughs (they might have occurred already), so we might be somewhere around year -10 to 0. I end up with the general life extension social breakthrough somewhere 2040-2060. Great news for current kids, a bit more worrying for us at 30+.

An interesting implication is that pension funds and similar long term investments might be very relevant for us 30+ transhumanists - they might be what matters if we are to self-fund life extension in the early expensive days in order to squeeze past the bottleneck. Of course, there are other potentially powerful technologies that could arrive before or during this period (I would expect human whole brain emulation somewhere in the middle of it), but the prudent thing is to save up.

My model seems to be rather sensitive to transitional research speed - if we can speed that up, then we might gain more than just life extension, such as better medicine in general. Similarly, it might be rational from a kind of Rawlsian perspective to aim for rapid spread across society - most of us do not know how wealthy we will be in 50+ years (not to mention that we might actually care about other people... imagine that!)

Appendix: Matlab/Octave code

% Simple life extension model
N=100000;     % population
maxyear=2000; % 2000 years of simulation
midyear=150;  % When technology is at 50 percent

% Baseline mortality by age (1..100), used only for the initial age
% distribution. Assumption: the listing used "mortality" here before
% defining it, so this applies the main-loop formula with agedam = age.
basemort = min(1/10000 + 10.^((1:100)*3/80-4.2), 1);

for fixable=0.5:0.5:1 % how much age damage can be fixed
  for g=4:8.5:12.5 % how quick the transition between pre and post is
    for wealtheffect = 15:35:50 % how many years later low-wealth people get it
      clf

      % Generate initial age distribution (rejection sampling on survival)
      age=zeros(N,1);
      for i=1:N
        age(i)=ceil(rand*100);
        while (rand>prod(1-basemort(1:age(i))))
          age(i)=ceil(rand*100);
        end
      end
      hist(age);

      born=-age;
      agedam=age;
      wealth=rand(N,1);
      meanagedeath=zeros(maxyear,1);
      lifetable=[]; % one row per death: [year of death, year of birth]

      for year=1:maxyear
        age=age+1;
        % age damage initially increases by 1 per year, then sigmoid decline
        % tech used based on wealth
        agedam=agedam + ((1-.5*fixable)-fixable*.5*tanh((year-midyear - (1-wealth)*wealtheffect)/g));
        mortality = 1/10000 + 10.^(agedam*3/80-4.2);
        mortality=(mortality<1).*mortality+(mortality>=1);

        % Reconstructed: the listing was truncated after "rand(N,1)".
        % Each person dies this year with probability "mortality".
        dies = rand(N,1)<mortality;
        meanagedeath(year)=mean(age(dies));
        lifetable=[lifetable; year*ones(length(find(dies)),1) born(dies)];

        % Births
        % Assumes replacement births keeping population constant
        age(dies)=0;
        agedam(dies)=0;
        wealth(dies)=rand(length(find(dies)),1);
        born(dies)=year;

        % Plot population every century
        if (rem(year,100)==0)
          hist(age,0:maxyear)
          drawnow
        end
      end

      % Calc cohort life expectancy
      lifexpect=zeros(maxyear,1);
      for year=1:maxyear
        X=find(lifetable(:,2)==year);
        lifexpect(year)=mean(lifetable(X,1)-lifetable(X,2));
      end

      % Plot everything
      % everything scaled so that year 0 is "discovery"
      xtrans = midyear-(25+2*g);
      clf
      year=1:maxyear;
      lim=maxyear-max(age); % birth year of the first survivor
      plot(year(1:lim)-xtrans, lifexpect(1:lim),'LineWidth',2)
      hold on
      plot(year-xtrans, meanagedeath,'g','LineWidth',2)
      plot(year-xtrans,50*(.5-fixable*.5*tanh((year-midyear - (1-1)*wealtheffect)/g)),'r','LineWidth',2)
      plot(maxyear-max(age)*[1 1]-xtrans,[0 max(lifexpect(1:lim))],'b:','LineWidth',2)
      plot(midyear*[1 1]-xtrans,[0 50],'r:','LineWidth',2)
      axis([-50 300 0 300])
      xlabel('Year after discovery')
      ylabel('')
      legend('Cohort life expectancy','Average age at death','Age damage/year','First survivor','midyear')
      title(sprintf('fixable=%2.2f, g=%2.2f, wealtheffect=%2.2f',fixable,g,wealtheffect))
      % Same damage curve for median and lowest wealth
      plot(year-xtrans,50*(.5-fixable*.5*tanh((year-midyear - (1-0.5)*wealtheffect)/g)),'r--','LineWidth',2)
      plot(year-xtrans,50*(.5-fixable*.5*tanh((year-midyear - (1-0)*wealtheffect)/g)),'r--','LineWidth',2)

      % Save picture
      print('-dpng',sprintf('F%2.2fG%2.2fW%2.2f.png',fixable,g,wealtheffect))
    end
  end
end

September 20, 2009

Postcoded maps

UK postal codes have always fascinated me, with their rich alphanumeric structure. Now I got the chance to play around with their geography.

Plotting their latitude/longitude (or in this case, my approximation to a map projection in km) produces a nice density map:

Zooming in reveals what looks like a self-similar fractal structure:

(at least in areas where geography does not strongly constrain, such as the south Wales valleys). Estimating the postcode density and plotting a cumulative distribution suggests that there is a mix of two power laws, one for rural areas and one for urban areas.

I also had some fun making a Voronoi diagram based on the code locations. Here is Oxford:

September 17, 2009

Seeing the colors of darkness

By now everybody will have heard the news, Technology Review: Color-Blind Monkeys Get Full Color Vision (original paper Katherine Mancuso, William W. Hauswirth, Qiuhong Li, Thomas B. Connor, James A. Kuchenbecker, Matthew C. Mauck, Jay Neitz & Maureen Neitz, Gene therapy for red–green colour blindness in adult primates, Nature advance online publication 16 September 2009 doi:10.1038/nature08401). The nice thing about this result is that it is gene therapy in adults rather than done in the embryonic state (like the knock-in mice of Jacobs et al). Here the new pigment was expressed in cones already doing vision and connected into the colour-processing pathways, but the new kind of signals caused plastic change in the processing so that they could distinguish between the new colours. I wonder how they experienced the difference?

In discussing this with transhumanist friends we immediately looked at the potential of not just becoming tetrachromats (which would increase chromatic discrimination) but extending the visual range by expressing some opsins sensitive to longer or shorter wavelengths. There are clear limits to this: due to the lens and vitreous humor being UV-opaque below ~300 nm we can only see near UV, and real "heat vision" for seeing other mammals in the dark will be hard to achieve given that the interior of our eyes is shining with the same IR wavelengths. Besides, the water will likely absorb IR beyond ~1500 nm (see this diagram of eye transmittance).

This discussion led me to look up one of those weird old research strands: replacing vitamin A in food with retinoids intended to change spectral sensitivity. Thanks to Edward Keyes' page I found these two papers:

Yoshikami, Pearlman, and Crescitelli, Visual pigments of the vitamin A-deficient rat following vitamin A2 administration, Vision Research 9:633-646 1969. This demonstrates that rats with a diet deficient in vitamin A but supplemented with A2 (3-dehydroretinol) did get different retinal pigments, with sensitivity shifted redward by 20 nm.

Ernest B. Millard and William S. McCann, Effect of vitamin A2 on the red and blue threshold of fully dark adapted vision, Journal of Applied Physiology 1:807-810 1949. This paper did the experiment on humans back in the 1940s, noticing a slight increase in sensitivity.

However, as Riis points out, going from A1 to A2 in humans would just shift our sensitivity peak from 565 nm to 625 nm. Not an enormous improvement! But we would be able to see a bit more of the very near infra-red.

It would be really interesting to be able to see so far down in IR (> 5000 nm) that one could tell the chemical differences between substances by doing visual IR spectroscopy. "Oh, that looks like it has a lot of trans-disubstituted alkenes!" But we need very different kinds of eyes to do it. It is not enough to tweak them slightly.

September 16, 2009

Getting rid of science noise

I dare to disagree with Ben Goldacre (I think): Practical Ethics: Academic freedom isn't free - I look at the debate over the AIDS-denialism papers in Medical Hypotheses. My conclusion is broadly that while the journal is filled with junk, it is a good thing that it exists. The way of dealing with bad science is open criticism, scientific connoisseurship and developing technical tools for supporting rational debate. It is simply not effective to try to suppress error at one single stage.

Here is a tool that I think should be developed: a support/rebuttal detector. Parsing scientific papers to see whether they actually rebut the science in another paper is AI-complete. However, it is likely feasible given the standardized style of scientific communication to do something like sentiment detection to make a probabilistic estimate of whether a text claims to rebut or support another text. Add this to large scientific publication databases, and make a rottentomatoes.com-like composite index that shows up next to PubMed searches or bibliographies (or even, if activated, as mouseover indicators for references in the text itself). I think this would be tricky but feasible, and even if it would just give a probabilistic measure of support it would still help judging papers.

The next step is of course to make this work for all sorts of claims.

September 14, 2009

I like to play in the utility fog

Compile-A-Child drawings by Shane Hope is one of the cutest depictions of a transhuman future I have ever seen.

September 13, 2009

Periodicity

Database of Periodic Tables is a wonderful list of periodic tables. No, it is not a periodic table of them, although there are many regularities between the attempts to organise the elements "better". It is so annoying that the underlying patterns of the elements, despite following simple rules, never seem to turn into a perfectly regular diagram that also shows useful properties.

September 12, 2009

Transfascists have the coolest uniforms

Charles Stross blogged about Chrome Plated Jackboots. His basic argument is that while environment, IP, privacy or biotechnology might provide fodder for 21st century politics, they are not really the big important drivers of the new ideologies we are going to see. That is a bold and interesting prediction. He instead thinks that the product of transhumanism, the ability to actually change human nature, is going to be the explosive force. If you can change human nature, what should you change it to? That can enable many ethical and political views that currently are utterly unthinkable. Even if you cannot (yet) change humans, the widespread belief that it is possible would support many possible programs - some of which no doubt will be deeply misguided.

Charles's main worry is with fascism, and he lunges at what he considers to be a nascent hybrid between transhumanism and Italian fascism. As he points out, he is less worried about transhumanists becoming fascists (we are after all pretty marginal) than about fascists becoming transhumanist. It doesn't take a genius to realize that the basic fascist mindset combined with the right technology could become very nasty.

During the 2008 workshop on global catastrophic risks in San Jose George Dvorsky talked about what he called "hard totalitarianism". What we have seen so far is "soft totalitarianism", attempts at controlling citizens limited by technology and especially human nature. Hard totalitarianism would involve actually succeeding in this. Hard totalitarianism might turn out to be less bloody than soft totalitarianism, but far more crushing of the human potential.

Stross links to Umberto Eco's very readable essay Eternal Fascism: Fourteen Ways of Looking at a Blackshirt which is a nice look at what he calls ur-fascism, the kind of core found in all fascist-like worldviews. ("combining Saint Augustine and Stonehenge -- that is a symptom of Ur-Fascism" :-)

Reading through his analysis suggests where most modern transhumanists completely diverge from fascism. We break with the cult of tradition, we embrace modernity, we try to keep a critical mindset and we embrace diversity. In fact, the kind of transhumanism I regard myself as part of - very much "classical extropianism" - makes a virtue of having a diverse, bickering, self-organising system where knowledge is built in evolutionary ways based on empirical findings rather than handed down wisdom or rationalistic arguments (sure, I trust deductions in physics and math, but only because of the empirical evidence for their reliability - I would not be as trusting of deductions in social science). Similarly it recognizes the diversity of potential human motivations and aims, which implies a need for great freedom to develop them if happiness is to be fully realized. This is why it promotes open societies - they are self-correcting and allow individual freedom. It doesn't have to assume the equal value of everybody, just that this value may well be incommensurable.

One of the most intriguing comments in Eco's essay is "In such a perspective everybody is educated to become a hero." One of the appeals of fascism today may be that it actually promises to return our heroes to us - the postmodern scepticism of heroism is deeply counter to a very human impulse to glorify exceptional people. It also reminded me of a scenario I was trying to use in a science fiction role-playing game a while ago:

The use of MPT to treat criminals started with “conscience transplantation” to psychopaths: they were given moral safeguards they previously lacked. This proved so successful that other moral therapies were developed to rehabilitate criminals. The first therapies were crude sets of rules, but as the technology advanced and the prisons were emptied the patterns became increasingly complex and ideal moral systems, adding not just morality but rehabilitative outlooks and personality traits.

While many people took full advantage of MPT enhancement to give themselves positive traits and subdue or erase negative traits, Character Enhancement Therapy (CET) started out slowly. At first seen only as a treatment of criminals it soon proved its worth as many rehabilitated people demonstrated great feats of courage, dedication and other admirable qualities. In some positions such therapied and hence certifiably ethical persons were more sought after than normals. As a response both to the demand for ethics and the inspiration of many therapied examples more and more people began CET in earnest. Beyond a certain point CET became seen as necessary as proper education, a guarantee of moral health and good citizenship. While never strictly mandatory everybody got it.

As the CET wave swept society, it induced a near-total moral conformity. While a few basic character templates existed they all emphasised pro-social values and the desire to support the shared culture. Political and cultural constraints dissolved and the Federation was formed. Within the Federation everybody sought only the highest moral excellence (as defined by the Federation character templates), producing a highly efficient, courteous, bold and utopian society. Individuality was not abolished and disagreements still possible, but the shared moral spine made trust and cooperation the default.

Mental politics within the Federation is mostly about how to update the shared character templates. Different ideas, versions and theories are carefully studied before they are included in the monthly citizen patches. In general the public wants the Federation to promote greater excellence, and the Federation tries to oblige.

(compare to Greg Bear's novel suite Queen of Angels, Slant and Moving Mars)

Note that in this scenario we end up with something that might not just be compatible with much of ur-fascism, but actually takes it much further than the flawed forms discussed by Stross and Eco. Why does fascism assume different values of different people? If Eco's model is right, it is because it acts as a driver for the ideology (social frustration), because it stabilizes society and because it is the only practical possibility. But the Federation can be totally egalitarian. One could imagine a Federation inhabited with total Kantians, all recognizing human dignity and equal value but still pushing outwards with zeal. It might not be elitist. It might eschew populism and newspeak. What makes it fascist is not that morality is modified (remember, this is done on a voluntary basis) but that it converges towards an identical morality driven by social pressures.

I'm not convinced this is an attractor state that all mind-hacking societies would end up in, but it shows that with sufficiently malleable humans entirely new technoideologies become possible. It is also worth considering whether we might not wish to partially go down this road in some limited domains - do we really wish to preserve human cruelty, and would it not be desirable to move to a heroic personality in times of real crisis? The means do not matter ethically as much as the fact that by assumption large groups of people can tune themselves. To some degree all societies attempt to impose socially accepted morals on their members, it is just that this process is very inefficient and limited. If it wasn't, then we would have potential to turn into the Federation.

But as the Federation emerges from a possibly quite liberal society and takes some of the fascist logic and turns it into something else, I also think we should expect many other possibilities - some likely very nice - of technoideologies that could emerge. Allen Buchanan did a Leverhulme lecture this spring where he suggested that enhancement may be almost a conservative duty. Transsocialists might follow From Chance to Choice in regarding enhancement as part of social justice - but in a transhuman knowledge society where transhuman capital is the important form of capital, why not try to liberate the knowledge workers further by giving them more control over the means of mental production? And translibertarians might argue that if we can remake ourselves, we might choose to become better at existing as independent rational agents than we currently are (last one to become Homo economicus is Pareto inefficient!). The potential for human nature politics looks vast, especially since it contains positive feedback loops back into the political system. I vote like I do because that is the kind of person I am - but if that personhood is affected by my votes?

September 10, 2009

Blue sky blueprints

New Scientist promotes Blueprint for a better world, ten radical ideas to improve the world.

- Evidence based politics

- Drug legalisation

- Keep everybody's DNA profile

- Measure non-wealth progress

- Research into geoengineering

- Real carbon taxes

- Use genetic engineering

- Stop the current ocean harvesting

- Pay people for uploading green power onto the grid

- Four day work weeks

I think some of these are very sensible (like shifting to a harm reduction approach for drugs, researching geoengineering properly, using genetic engineering, managing the oceans sensibly and pricing carbon realistically). The big problem is that many of the improvements are based on very strong assumptions about the government - universal DNA databases are perfectly OK if police can be trusted and kept in check. Evidence based policy is a great idea, but would it really be implemented by a self-serving administrative class? And the four-day week seems to assume that everybody would be doing the same everywhere, within a Western European welfare state. I think it is more likely that we are going to see people having even more different lengths of their work-weeks, especially since most creative jobs already have ill-defined weeks.

Still, as utopianism goes this list is pretty good. Many of the entries look eminently doable or at least possible to implement partially to get information.

I would probably add methods of improving government transparency and accountability to the list. The greatest threats to human flourishing today seem to come directly or indirectly from bad governance, corruption and closed societies. Finding ways to overcome that would do a great deal to improve our ability to fix other problems. We can start at home by demanding greater transparency and a reciprocal increase of accountability for every proposed privacy intrusion. We can develop software tools to sustain societal transparency, both by automatically documenting what is being done (imagine a public database tracking all expenses and resources, possible to mine through an API) and by rewarding improvement suggestions (consider a whistle-blower/improvement service that can give people rewards proportional to the savings made when they point out waste, abuse or inefficiency), and generally construct open e-governance systems - or independent institutions keeping an eye on them. We can also export transparency by making it part of foreign aid or by building it into exported technology. Lots of possibilities, plenty of policy positions one can take, but clearly an important area.

September 09, 2009

Stupid arguments against life extension

Practical Ethics: Longer life, more trouble? - I comment on a Times article that claims life extension will make us worse off because 1) it would be bad for society, and 2) because the finiteness of life gives it value. These are bad arguments that seem to crop up again and again.

Arguing that we shouldn't do X because of bad social consequences hinges on the assumptions that we can accurately predict these social consequences to be bad, that we cannot fix them in a reasonable way and that their badness will always outweigh the good X could do. The assumptions in the case of life extension are very debatable, since we actually do have data about how societies handle longer lifespans and it suggests that they do adapt nicely and become happier. Maybe an extremely rapid shift would be wrenching, but that would also correspond to a large number of people who would otherwise have died, often horribly, now not dying. Trying to find some social effects that can outweigh the badness of 100,000 deaths a day is hard, and I have not seen any convincing argument along these lines. Worse, even if the consequences were indeed that bad, the person making the argument needs to make a convincing case that people would be unable or unwilling to overcome them. This is obviously problematic when dealing with issues like pensions, employment or overpopulation where societies have indeed dealt with them (not always wisely, but that is not enough to save the argument).

The argument is basically a consequentialist argument, since in a rights ethics we might still have a duty or right to do X no matter what.

The claim

And that is not to mention the obvious ethical problem that, in a democracy, it is impossible to see how successful techniques that arrest ageing could be kept as the property of only those who could pay the requisite price. The more genetic information we gather, the more we need to settle to whom it truly belongs.

is particularly telling. So the problem is that life extension wouldn't be limited to the rich? Either this is a typo and actually the typical "but only the rich will be able to afford it" argument against enhancement, or the author thinks that life extension is so bad that it is a problem that it will leak out to the public, who unwisely would use it.

If it is the first kind of argument, then the reference to democracy shows the internal inconsistency: in liberal democratic societies the voters tend to get what they desire and in market economies prices of technology tend to fall due to technological advance and the incentives to supply more. Life extension is hence unlikely to remain only for the rich unless there are some extreme fundamental costs associated with it (maybe it needs a fully trained doctor on standby all day?) More likely it is going to be expensive at first, then come down in price and soon be regarded as a right in the health care system (and quite likely a cost-saver too, since a slowing of ageing reduces multiple chronic diseases).

The second argument, that life extension is bad but people still desire it, has to be powered either by some psychological claim (like the "death gives life a meaning") or some fundamental ethical claim. The article handwaves the meaning claim, which is clearly problematic, since what matters must be either the length of life or merely that it is finite. If length of life matters, then we have empirical data to refute it (happiness studies of long- and short-lived nations and populations). If it is merely finitude, then life extension clearly has no effect since it doesn't solve mortality. Giving an ethical claim that a slowing of ageing is bad is certainly possible, such as a Sandel-like acceptance of the Given or a Kass-like devotion to posterity, but this is rarely done in general debates - mostly because most such arguments are themselves rather iffy.

It is like the "death gives life meaning" argument. It sounds deep, but nobody would consider "divorce gives love meaning" or "bookworms give libraries meaning" profound or even accurate. The meaning of love is to be found in the deep positive emotions and relations, not in their breakdown or ebb. Similarly life has a meaning based on that it is *lived*, not any boundary condition.

Join the Cloud

I participated in the Cloud Intelligence Symposium at the "Human Nature" Ars Electronica Festival 2009. It was a very fun event that linked rather theoretical considerations by me, Stephen Downes, and Ethan Zuckerman with very real-world applications and activism by Isaac Mao, Hamid Tehrani, Xiao Qiang, Evgeny Morozov, Kristen Taylor, Teddy Ruge, Pablo Flores, Andrés Monroy-Hernández, and Juliana Rotich.

Audio and video of our talks can be downloaded here. Presentations are appearing on Slideshare. There is a web page for cloud intelligence. During the talks we, the audience and the net twittered continuously (tag #arscloud) - yes, now I am part of the twittering masses too.

Cloud Superintelligence

My slides can be found here. My talk was about the question of why groups of people can be so smart (consider ARG solving teams or a market), yet can obviously also be far less intelligent than they could be (consider national foreign policy, market bubbles etc). Part of the answer is how the group solves its internal communication problems - better organisation produces greater benefits than just making individuals better, and allows bigger groups. This can be done formally, as with a company, or informally using self-organisation or just shaping by the right medium.

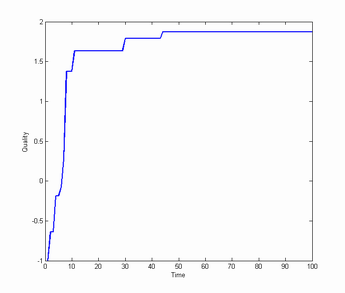

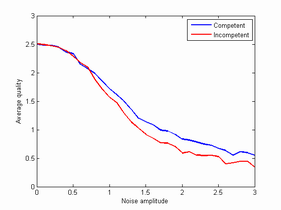

A simple example is how Wikipedia page quality may develop. Imagine that people of random competence (normally distributed) visit a page, and if they find the page to be worse than their own competence they rewrite it to their level. The effect is a gradual improvement (growing roughly as sqrt(log(n)) with the number of visitors n).

This works even if people have a noisy estimation of their own competence (or the page's quality): even if the noise is two standard deviations there will be almost one standard deviation of improvement in quality after 100 visitors. Adding incompetent agents who don't recognize that their basic skill is below average still doesn't change things much! The system is pretty robustly self-correcting. You don't need many agents to get a big improvement at the start, but you want the other agents to maintain the quality.
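A minimal Python sketch of this kind of page model, for readers who want to play with it. It is not the code behind the slides: the function name `simulate_page`, the starting quality of 0 (average competence) and the exact noise model (each visitor misjudges their own competence by a Gaussian error, but writes at their true level) are assumptions made for illustration. Without noise the page quality is simply the running maximum of the visitors' competences, and the expected maximum of n standard normal draws grows like sqrt(2 ln n), which is where the sqrt(log(n)) behaviour comes from.

```python
import random


def simulate_page(n_visitors, noise_sd=0.0, start_quality=0.0, seed=None):
    """Return the page quality after each visitor (illustrative sketch)."""
    rng = random.Random(seed)
    quality = start_quality
    history = []
    for _ in range(n_visitors):
        # True competence, in standard deviations from the average visitor.
        competence = rng.gauss(0.0, 1.0)
        # Assumption: visitors misjudge their own competence by Gaussian noise.
        perceived = competence + rng.gauss(0.0, noise_sd)
        if perceived > quality:
            # The visitor believes they can do better and rewrites the page,
            # but the result is only as good as their true competence.
            quality = competence
        history.append(quality)
    return history


def mean_final_quality(n_visitors, noise_sd, runs=200):
    """Average final page quality over several independent runs."""
    finals = [simulate_page(n_visitors, noise_sd, seed=run)[-1]
              for run in range(runs)]
    return sum(finals) / runs


if __name__ == "__main__":
    for noise in (0.0, 1.0, 2.0):
        print(noise, round(mean_final_quality(100, noise), 2))
```

Running it with different `noise_sd` values gives a feel for how quickly the improvement degrades as self-assessment gets worse; the numbers will depend on the noise model chosen here.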

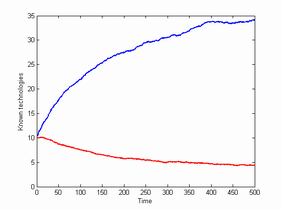

Another reason why big groups are good is that they can maintain more knowledge and competence than small groups. Consider the model where each agent in a group knows three technologies, and agents randomly die and are replaced by new agents who learn new technologies from random elders. If inventions occur at a slow rate, a small population will lose its technologies. Larger populations not only maintain them, they build up a bigger repertoire over time.
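The technology model can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the simulation used for the talk: everyone starts out knowing the same three technologies, a newcomer fills each of its three slots by copying one technology from a randomly chosen elder (duplicates collapse, so a newcomer may end up knowing fewer than three), and with a small probability `invent_prob` the newcomer also invents something new. The function name and all parameter values are hypothetical.

```python
import random


def simulate_technologies(pop_size, steps, invent_prob=0.02, slots=3, seed=None):
    """Return the number of distinct technologies known after `steps` deaths."""
    if pop_size < 2:
        raise ValueError("need at least one elder to learn from")
    rng = random.Random(seed)
    # Assumption: everyone starts with the same `slots` founding technologies.
    population = [set(range(slots)) for _ in range(pop_size)]
    next_tech = slots
    for _step in range(steps):
        dying = rng.randrange(pop_size)
        newcomer = set()
        for _slot in range(slots):
            # Pick a random elder other than the agent being replaced.
            elder = rng.randrange(pop_size - 1)
            if elder >= dying:
                elder += 1
            # Every agent knows at least one technology, so this never fails.
            newcomer.add(rng.choice(sorted(population[elder])))
        if rng.random() < invent_prob:
            # A rare invention; it displaces a learned technology if full.
            if len(newcomer) >= slots:
                newcomer.remove(rng.choice(sorted(newcomer)))
            newcomer.add(next_tech)
            next_tech += 1
        population[dying] = newcomer
    return len(set().union(*population))


if __name__ == "__main__":
    for size in (5, 20, 200):
        runs = [simulate_technologies(size, 5000, seed=s) for s in range(5)]
        print(size, runs)
```

In this sketch a small group drifts down towards a handful of shared technologies because each death can erase the only copy of something, while a large group keeps enough copies around for inventions to accumulate.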

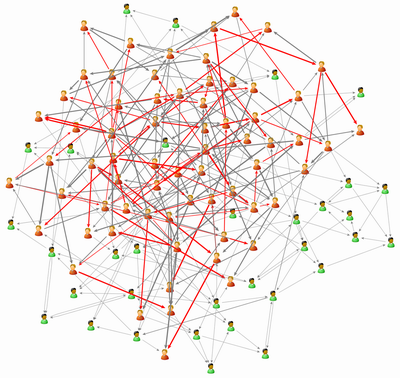

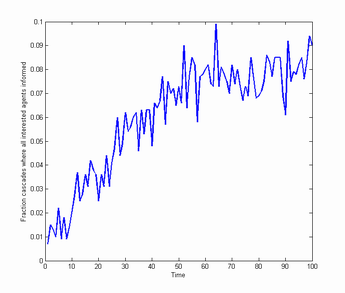

My third model is a model of blogs. Agents read a limited number of information sources and have certain interests. Random agents post stories based on their interests. If a source that an agent reads carries a piece of interesting news, the reader regards that source as more useful. Agents write about the interesting news with a certain probability, creating information cascades. Over time agents randomly shift to other sources if one proves useless (e.g. no interesting news from that agent in 1000 iterations).

It turns out that the agents become better and better at distributing news. At first most agents will be isolated from agents sharing their interests, but over time communities develop. These in turn act as "antennas" helping agents get news even though they have a limited number of inputs - the blogosphere helps overcome attention problems.
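A rough Python sketch of a blog model along these lines is given below. It is a simplified reading of the description, not the original simulation: each agent has a single interest, reads `n_sources` other agents, reposts interesting news with probability `repost_prob`, and replaces any source that has carried nothing interesting for `patience` iterations with a random new one. All names and parameter values are assumptions chosen to keep the run short. As a crude measure of community formation, the function reports the fraction of reading links that connect agents sharing an interest, before and after the run.

```python
import random


def simulate_blogs(n_agents=60, n_topics=4, n_sources=3, steps=3000,
                   repost_prob=0.5, patience=200, seed=None):
    """Return (initial, final) fraction of links between like-minded agents."""
    rng = random.Random(seed)
    interests = [rng.randrange(n_topics) for _ in range(n_agents)]

    def random_source(reader, current):
        # Pick a source that is neither the reader nor already being read.
        while True:
            candidate = rng.randrange(n_agents)
            if candidate != reader and candidate not in current:
                return candidate

    # sources[a] maps each source read by agent a to the step at which it
    # last carried news that a found interesting.
    sources = []
    for a in range(n_agents):
        current = {}
        while len(current) < n_sources:
            current[random_source(a, current)] = 0
        sources.append(current)

    def shared_interest_fraction():
        links = [(a, s) for a in range(n_agents) for s in sources[a]]
        return sum(interests[a] == interests[s] for a, s in links) / len(links)

    start = shared_interest_fraction()
    for step in range(1, steps + 1):
        poster = rng.randrange(n_agents)
        topic = interests[poster]
        informed = {poster}
        frontier = [poster]
        while frontier:  # the information cascade, one hop at a time
            posting = set(frontier)
            frontier = []
            for a in range(n_agents):
                if interests[a] != topic:
                    continue  # the story is not interesting to this agent
                hits = [s for s in sources[a] if s in posting]
                for s in hits:
                    sources[a][s] = step  # this source proved useful
                if hits and a not in informed:
                    informed.add(a)
                    if rng.random() < repost_prob:
                        frontier.append(a)
        # Drop sources that have been useless for too long.
        for a in range(n_agents):
            for s in list(sources[a]):
                if step - sources[a][s] > patience:
                    del sources[a][s]
                    sources[a][random_source(a, sources[a])] = step
    return start, shared_interest_fraction()


if __name__ == "__main__":
    print(simulate_blogs(seed=1))
```

The size of `informed` at the end of each cascade could also be logged to see how much of the interested audience a story reaches as the network rewires itself.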

Overall, the right kind of organisation can make agents much smarter. It is also possible to augment it with "superknowledge", using big databases like Google or (my favourite) 80 million tiny images: enough data or knowledge can itself substitute for intelligence in certain ways.

My conclusion was that we are already in an era where posthuman superorganisms are running things - we just know them as markets, nations, companies or blogging cliques. However, so far these superorganisms have evolved by trial and error, and often not in the direction of greater intelligence (just consider how cognitive biases are often built into or promoted in institutions for reasons that make sense on the individual human level but make the institution pretty incompetent). With new media and new awareness of distributed cognition we might actually start seeing much smarter cloud intelligences. And we will be in them.