January 23, 2010

The unique chess decade

Garry Kasparov has an interesting essay on the effects of widespread beyond-grandmaster chess software on the chess community.

Here is a quote that caught my eye:

It was my luck (perhaps my bad luck) to be the world chess champion during the critical years in which computers challenged, then surpassed, human chess players. Before 1994 and after 2004 these duels held little interest. The computers quickly went from too weak to too strong. But for a span of ten years these contests were fascinating clashes between the computational power of the machines (and, lest we forget, the human wisdom of their programmers) and the intuition and knowledge of the grandmaster.

This is a nice illustration of Eliezer's "linear singularity" case: even a steady increase in performance may look surprising and sudden if we use a parochial scale, since we do not notice the change while it occurs below the usual human range (see slides 5-6 and 20 of his presentation at the Singularity Summit 2007).

Another interesting point, about computer-aided chess:

Weak human + machine + better process was superior to a strong computer alone and, more remarkably, superior to a strong human + machine + inferior process.

It is all in the software, it seems.

January 18, 2010

Bark in the bread moves the percolation threshold

How resilient is a society to disruptions? Here is one simple model with some interesting dynamics.

The society is modelled as a network of nodes, where each node gets inputs (information, energy, money, goods etc.) from other nodes. It needs several kinds of input to function, so if it cannot get all of them it will stop working. This can of course cause a cascade as more and more nodes stop working due to shortages.

If each node has k inputs, and the probability that a node is working is p, then the probability that a given node has all its inputs working is p^k. For k > 1 the equation p=p^k has only the solutions p=0 and p=1 in the interval [0,1], but finite discrete networks have subnetworks that can, by luck, avoid collapse. These are very small compared to the whole network, though: this model is very unstable, and any small disruption tends to spread to the whole system.
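The cascade can be sketched in a few lines of Python. This is only a minimal sketch under my own assumptions, not the code behind the plots: each node draws its k inputs uniformly at random from all nodes, each node starts out working with probability p, and the function name `surviving_fraction` is made up for the illustration.

```python
import random

def surviving_fraction(n, k, p, seed=0):
    """Fraction of nodes still working once the failure cascade has settled.

    Assumed wiring (not from the original post): every node picks k input
    nodes uniformly at random, with repeats and self-links allowed.
    """
    rng = random.Random(seed)
    inputs = [[rng.randrange(n) for _ in range(k)] for _ in range(n)]
    # Each node is initially working with probability p.
    working = [rng.random() < p for _ in range(n)]

    changed = True
    while changed:
        changed = False
        for i in range(n):
            # A working node fails as soon as any one of its inputs has failed.
            if working[i] and not all(working[j] for j in inputs[i]):
                working[i] = False
                changed = True
    return sum(working) / n

if __name__ == "__main__":
    for k in (1, 2):
        for p in (0.5, 0.9, 0.99):
            print(k, p, surviving_fraction(1000, k, p))
```

Failures only ever spread, so the loop always terminates, and the order in which nodes are visited does not change the final set of survivors.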

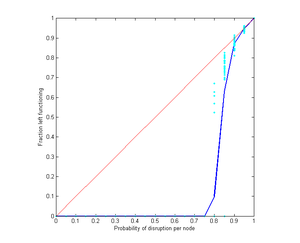

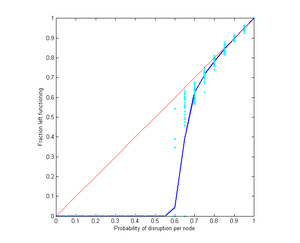

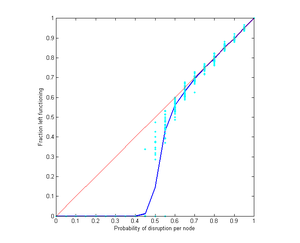

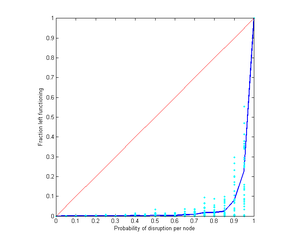

[Erratum: the x-axis has the wrong label below. It really denotes the probability p that a node is *not* disrupted.]

Number of stably functioning nodes when a network with k=1 starts with different fractions of failed nodes. The blue line is the average of individual runs, shown in turquoise. The red line indicates the perfect case, where taking out a certain fraction has no further effect.

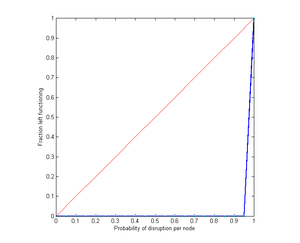

As above, but each node now requires two goods. Even a very small disruption crashes all nodes.

Substitutes

In real societies different goods can substitute for each other. If there is a shortage, consumption shifts to an alternative, until all the alternatives are unavailable. This softens the effect of multiple dependence.
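One way to sketch substitution in code, again under my own assumptions rather than as the exact model simulated here: each node needs k goods, and for each good it has m possible suppliers (the default source plus m-1 substitutes) picked uniformly at random. A good counts as available as long as at least one of its suppliers is working. The names `surviving_fraction_with_substitutes`, `k` and `m` are illustrative.

```python
import random

def surviving_fraction_with_substitutes(n, k, m, p, seed=0):
    """Fraction of nodes working after the cascade, with m suppliers per good.

    Assumed wiring (not from the original post): for each of its k goods a
    node picks m supplier nodes uniformly at random. m=1 means no substitutes.
    """
    rng = random.Random(seed)
    suppliers = [
        [[rng.randrange(n) for _ in range(m)] for _ in range(k)]
        for _ in range(n)
    ]
    # Each node is initially working with probability p.
    working = [rng.random() < p for _ in range(n)]

    changed = True
    while changed:
        changed = False
        for i in range(n):
            if not working[i]:
                continue
            # The node needs every good, but any one supplier of a good will do.
            has_all_goods = all(
                any(working[j] for j in good) for good in suppliers[i]
            )
            if not has_all_goods:
                working[i] = False
                changed = True
    return sum(working) / n

if __name__ == "__main__":
    for m in (1, 2, 3):
        print(m, surviving_fraction_with_substitutes(1000, 2, m, 0.9))
```

With m=1 this reduces to the plain model where every input is indispensable.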

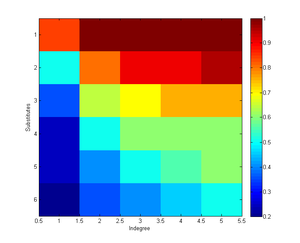

Here are the results of simulating a network with 1000 nodes and different numbers of available substitutes.

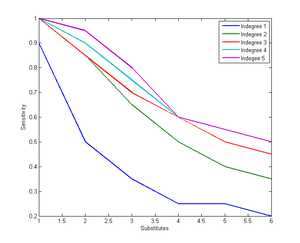

Here is a diagram of sensitivity as a function of number of substitutes and number of inputs:

The colours denote the lowest disruption probability where just a fraction of 0.05 of nodes remain active; warm colours indicate sensitive societies (crash at high p). When there are no substitutes sensitivity is very high. Substitutes rather efficiently counteract the effect of multiple dependencies.

Allowing nodes to have a uniformly distributed in-degree or number of substitutes did not change the outcome much: such networks behaved like ones with a somewhat reduced in-degree or number of substitutes respectively. Even making certain nodes more likely to be the default source of different goods (i.e. node i links to nodes 1 to i-1) did not have much effect, since substitution could circumvent such bottlenecks. Making the substitute goods equally skewed surprisingly did not worsen the situation much, and instead tended to soften the percolation transition.

One conclusion seems to be that if we want to increase the resiliency of our society we should work on increasing substitutability. Devices and software should be able to use alternative infrastructures. Knowledge of what can be substituted for what should be disseminated (so no time is lost when disaster strikes in trying to figure it out). This is particularly true in areas where many different kinds of inputs are needed.

An IgNobel prize contender?

Here is a lovely little paper titled [0912.3967] Road planning with slime mould: If Physarum built motorways it would route M6/M74 through Newcastle. The authors use slime moulds to find optimal road networks:

We consider the ten most populated urban areas in United Kingdom and study what would be an optimal layout of transport links between these urban areas from the "plasmodium's point of view". We represent geographical locations of urban areas by oat flakes, inoculate the plasmodium in Greater London area and analyse the plasmodium's foraging behaviour.

They found that the slime mould was pretty accurate in predicting the real motorway network, with the exception of the M6/M74.

Mould intelligence is not as stupid a concept as it sounds. We might not want to use real slime moulds to solve optimization problems, but the distributed particle model seems to have some promise.

January 12, 2010

The Coalition of the End-of-the-World Unwilling

[0912.5480] The Black Hole Case: The Injunction Against the End of the World by Eric E. Johnson is a paper on the legal problems of handling existential risk and radical uncertainty. I like it because it cites our paper - Johnson has even read and understood it! The same cannot be said of the bloggers who misunderstood his paper and think he is suggesting the US should bomb Switzerland...

The real chain of confusion seems to be Johnson's paper -> Business Week -> democrat bloggers. This is the typical result of not really reading the sources but assuming one knows exactly what the previous source is talking about (and that its author has also read the sources).

Actually, taking military action against somebody doing potentially world-endangering activities doesn't seem that wrong. We have just war theory, we accept intervening for humanitarian reasons (hard to get more humanitarian than trying to save all humans) and there have been wars and interventions to prevent development of merely GCR-level technology (Israel vs. Syrian and Iraqi reactors).

But handling radical uncertainty may be problematic legally and politically, as demonstrated by the recriminations over the Iraq WMDs. If Elbonia is trying to develop a Heim-theory reactor that would be a terrible threat only if a fringe physics theory were true, should the international community intervene? What if the sultan of Foobaria was seriously trying to summon Cthulhu?

It seems that sensibly allowing that our theories could be wrong puts a bit of probability mass on extreme and odd possibilities, and multiplied by the value of human extinction (which is at least as bad as 7 billion individual deaths, possibly much worse) this could become rather hefty. You might estimate that the chance that Heim theory is right may be one in 10,000 and the Elbonian project will have a 1/1000 chance of blowing up in this case. That makes the total badness at least 700 dead people. But these people are statistical people rather than real people, and the whole analysis hinges on very suspect priors. So we should expect both a lot of biases to come into play (new, unusual risks, low level of controllability, low probabilities, fictional example bias, etc) and people to diverge on their estimates - if they even take the issue seriously, given the anti-silliness bias and attention conservation we all have. We might agree that Elbonia looks more dangerous than Foobaria because most of us think elder gods are less likely than bad physics experiments, but we shouldn't trust that judgement much.
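The arithmetic behind the 700 figure is just a product of the numbers quoted above; here it is spelled out as a small sketch (the variable names are mine, and exact fractions are used to avoid rounding noise):

```python
from fractions import Fraction

population = 7_000_000_000                   # lower bound on the badness of extinction, in deaths
p_theory_true = Fraction(1, 10_000)          # chance that Heim theory is right
p_disaster_given_true = Fraction(1, 1_000)   # chance the project blows up if it is

# Expected number of deaths: probability of the disaster times its size.
expected_deaths = population * p_theory_true * p_disaster_given_true
print(expected_deaths)  # 700
```

Changing either probability by a factor of ten moves the result by the same factor, which is why the suspect priors matter so much.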

These biases are a valid reason to be sceptical of military interventions against low-probability existential threats. The question is whether the world community would be willing to intervene against a high-probability threat. At some point the probability would be high enough that some nation would unilaterally regard the threat as big enough to intervene despite the diplomatic costs. But below that there would be a region where the community would have reason to act as a group. Could we set up functioning legal norms for this?

They probably just watched the YouTube clip

The UK Home Office clearly lacks any knowledge of history: why to use ID cards (flash). Wow... this is a monumental own goal. This is exactly why anonymity is sometimes important.

I don't mind having an ID number and ID card as long as I can count on my government to be trustworthy and competent. But very few governments manage to reach that level, and they may not remain at that level. Being able to discard identities (even at some cost) is important.

(via Samizdata)