April 30, 2004

What to do about the universe?

Slashdot mentioned the paper Universal Limits on Computation by Lawrence M. Krauss and Glenn D. Starkman. They demonstrate that in a de Sitter spacetime (which the universe will approximate if dark energy is dominant) the amount of energy that can be scooped up and used for computation by any civilization is finite.

It is a nice example of clearly reasoned physical eschatology, even if the conclusion is a bit depressing. But then again, it is hard to get the fire-and-thunder drama of Tiplerian omega points in an open universe in the first place, and rapidly expanding open universes are especially nasty. We need to fix it.

The basic result of the paper seems very robust. In a de Sitter spacetime there is a horizon beyond which no information or matter can reach an observer. Hence the amount of computation a finite-sized system can achieve is finite, even given infinite time. They show that you can get 3.5e67 J by spreading replicating von Neumann probes converting rest mass into energy and sending it back to their origin, roughly the total energy of baryonic matter within today's horizon. They also show that distributed processing achieves 1/6 less information gain than having it all at the origin (and this assumes no energy losses due to transmission).

The real killer is the Hawking radiation from the horizon, whose temperature hbar H / (2 pi k_B) is constant. This makes the cost of erasing one bit of information (necessary for error correction even if reversible computation is used otherwise) constant, and the total amount of information processing finite. If the universe got cooler fast enough the amount of information processed could diverge.
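To get a feeling for the numbers, here is a minimal Python sketch that combines the horizon temperature with the Landauer bound (k_B T ln 2 per erased bit) and the 3.5e67 J budget quoted above. The Hubble rate used is my own assumption (roughly today's value); the function names are made up for illustration and nothing here is taken from the paper itself.

```python
import math

# Physical constants (SI units).
HBAR = 1.054571817e-34   # reduced Planck constant, J s
K_B = 1.380649e-23       # Boltzmann constant, J/K

# ASSUMPTION: asymptotic Hubble rate, taken as roughly today's value
# (about 70 km/s/Mpc). The true de Sitter value depends on the dark
# energy density and would be somewhat smaller.
H = 2.27e-18             # 1/s

# Energy budget quoted in the text from Krauss and Starkman.
E_TOTAL = 3.5e67         # J


def horizon_temperature(hubble_rate):
    """Temperature of the de Sitter horizon, T = hbar * H / (2 * pi * k_B)."""
    return HBAR * hubble_rate / (2.0 * math.pi * K_B)


def erasure_cost(temperature):
    """Landauer bound: minimum energy needed to erase one bit at this temperature."""
    return K_B * temperature * math.log(2.0)


def max_erasures(energy, hubble_rate):
    """Upper bound on the number of bit erasures a fixed energy budget can pay for."""
    return energy / erasure_cost(horizon_temperature(hubble_rate))


if __name__ == "__main__":
    t = horizon_temperature(H)
    print(f"horizon temperature: {t:.2e} K")
    print(f"cost per erased bit: {erasure_cost(t):.2e} J")
    print(f"maximum erasures:    {max_erasures(E_TOTAL, H):.2e}")
```

Since the temperature is proportional to H, halving the assumed Hubble rate doubles the number of affordable erasures, but for any fixed H the total stays finite.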

So we need to either find a way of cooling off parts of the universe so that we can do more computation, change the spacetime topology to get rid of horizons or construct a more liveable baby universe.

Cooling requires a heat bath colder than the horizon Hawking radiation. A neat idea suggested by Wei Dai is to use big black holes. But to get colder than the horizon, we need holes with radii larger than it, so that scheme won't work. To my knowledge there are no other suitable heat baths, although maybe one could pump entropy from baryonic matter into weakly interacting particles like neutrinos (neutrino cooling). Unfortunately they do interact weakly (duh!), so in the long run both heat reservoirs will tend to equilibrate. But as a short run or local solution it might work well.
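The black hole argument can be checked with a rough Python sketch. It assumes the flat-space Schwarzschild formula for the Hawking temperature (which is not really trustworthy for a hole comparable in size to the horizon) and an assumed Hubble rate close to today's value. Under those assumptions the break-even radius comes out at half the horizon radius c/H, the same order of magnitude as the horizon itself, so the conclusion stands.

```python
import math

# Physical constants (SI units).
HBAR = 1.054571817e-34   # reduced Planck constant, J s
K_B = 1.380649e-23       # Boltzmann constant, J/K
C = 2.99792458e8         # speed of light, m/s

# ASSUMPTION: Hubble rate of the de Sitter phase, roughly today's value.
H = 2.27e-18             # 1/s


def horizon_temperature(hubble_rate):
    """De Sitter horizon temperature, T = hbar * H / (2 * pi * k_B)."""
    return HBAR * hubble_rate / (2.0 * math.pi * K_B)


def black_hole_temperature(schwarzschild_radius):
    """Hawking temperature of a Schwarzschild hole, T = hbar * c / (4 * pi * k_B * r_s)."""
    return HBAR * C / (4.0 * math.pi * K_B * schwarzschild_radius)


def break_even_radius(hubble_rate):
    """Radius at which the hole is exactly as cold as the horizon: r_s = c / (2 H)."""
    return C / (2.0 * hubble_rate)


if __name__ == "__main__":
    r_horizon = C / H
    r_hole = break_even_radius(H)
    print(f"horizon radius:    {r_horizon:.2e} m")
    print(f"break-even radius: {r_hole:.2e} m")
    print(f"ratio:             {r_hole / r_horizon:.2f}")
```

Any hole smaller than the break-even radius is hotter than the horizon and therefore useless as a cold reservoir.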

Changing spacetime topology seems to be hard, since any action done to remove the horizon has to reach it, but the accelerating expansion of the universe makes it recede. There are likely other, deeper reasons why horizons can't be removed, like the Penrose inequalities and other global constraints.

That leaves building a new universe. The Krauss and Starkman paper gives us how much mass-energy we could in principle gather together. It is obvious that we could just pile it up into a big black hole (which, according to some models such as the Linde-Smolin model, might spawn a baby universe or more), but it might be possible to convert it to something more useful. Especially if inflation holds, it ought to be possible to cause the formation of a new inflationary domain, which would hopefully be more hospitable. Energy-wise it seems plausible that 1e67 J could be used to drive the energy density to the Planck level in a fairly large region, enabling inflation to kick in. The key problem here might be that the new domain recedes too fast from the current spacetime for us to follow, and of course (as noted by Tipler) that the amount of information we can squeeze into the "wormhole" into it is finite. However, when using it as an escape hatch to a universe with unbounded future this might be acceptable. A more pressing and interesting problem is whether it is possible to do chaos control on chaotic inflation to achieve the right symmetry breaking for pleasant physics and the right kind of vacuum energy.

April 25, 2004

Taking a Cat Map

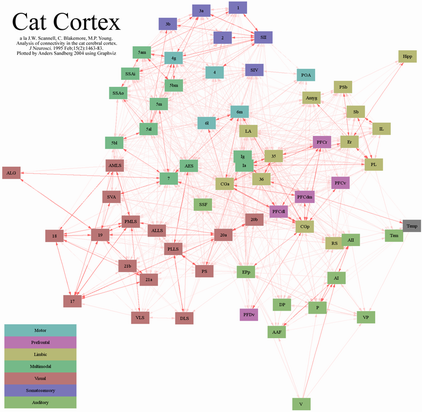

A bit of visualizing entertainment: plotting the cat cortex connectivity data of JW Scannell, C Blakemore and MP Young, Analysis of connectivity in the cat cerebral cortex, Journal of Neuroscience, Vol 15, 1463-1483.

Again, I used Graphviz, coloring connections (this time directional) according to anatomical strength, and areas by their function (guessing a few, like the retrosplenial cortex that really looks very limbic).

The result is rather nice. One can see the starfish-like structure of sensory hierarchies merging in a transmodal middle discussed in Marcel Mesulam, From Sensation to Cognition, Brain (1998) 121, 1013-1052. Area 35/36 is perirhinal cortex, Ig/Ia insula, CGa/CGp cingulum, 6l/6m premotor cortex and they are heavily connected to the prefrontal cortices. Note the low-level interconnections between the somatosensory and motor systems and the medial temporal lobe memory system at the upper right.

I'm somewhat uncertain of some of the areas. Anybody who can tell me what PFDv, A6L, T, V and Temp are? I left out A6L (visual?) and T (limbic?), guessed that PFDv is dorsal prefrontal cortex, that V is something auditory and that Temp is all of the MTL.

Graphs like this should be taken with a grain of salt - some connections might have been missed or never even studied (who looks for a direct visual to motor link?), and even a few axons might have a huge influence functionally. The information we gain from them is a vision of the overall structure of an adaptive agent: hierarchies extracting regularities from the sensory input, using feedback to constrain or search for details. More decorrelated and distributed representations are combined into multimodal patterns, where they are linked to the biological and emotional state of the agent. This central state interacts with the behavior selection system that can activate motor output, as well as memory systems storing or retrieving older states. But there are plenty of cross-connections enabling quick responses and adaptive tricks like automatic attention direction to changing stimuli or fast emotional responses to fearful stimuli.

It would be neat to get the same atlas for the human brain. But that is still some way off, given how tricky it is to determine connectivity (we have CoCoMac for the Macaque). Not to mention the subcortical connectivity. Scannell has a paper with the thalamic connections, but they are not that fun - I want the rest of the subcortical menagerie too!

April 22, 2004

Big Brother and His Little Siblings

Pro et Contra is a series of public debates on technology and ethics at KTH. Today's subject was surveillance and society, framed in terms of Orwell's 1984 and with among others the national police commissioner Sten Heckscher, philosophy professor Sven-Ove Hansson and two of his grad students, and IT-strategist Pär Ström. It was a lovely example of Swedish conflict avoidance. The philosophers avoided being too normative, suggesting frameworks for judging surveillance as better and worse - not acceptable or unacceptable. Pär warned that we were entering into a 1984 world, but did little to suggest that anything could be done, while Sten pointed out that intrusions into personal integrity might be necessary for the rule of law and legal security.

In the end, I was left with the impression that it was Sten who got to be normative because he was running things - a typical result when philosophers and debaters abandon normativity in the interest of preserving a cozy atmosphere and avoiding having to take a firm stand on complex and troubling questions.

Some of my thoughts:

The basic framing was in terms of Orwell's 1984, Boye's Kallocain, Huxley's Brave New World and Bradbury's Fahrenheit 451: the canon of classic dystopias. It showed in the style of debate: the threat was always seen as the centralized information gathering powers of government, corporations and media, and the remedies always in the form of either laws and regulations, or technology. Very similar to the debates held around 1980 in Sweden as the integrity risks of computer databases were realized. But where were the new visions? David Brin, Bruce Sterling and Vernor Vinge have described new, potentially far more worrisome aspects of ubiquitous surveillance. But these modern visions were alien to the debate.

The core assumption that is hard to shake is that power is scalar, something that can be measured along a simple scale of powerlessness to ultimate rulership, and that surveillance ability follows this scale. At most it is possible to recognize that there exist multiple independent groups exerting power, like the government, the corporations and the media (with internal hierarchies and divisions). They are the wardens watching from the panopticon one-way mirror central tower.

But the prisoners are all watching back. They might not see exactly what is going on in the tower, but the wardens cannot even open a window without allowing somebody to see some of what they are doing. They are getting constrained by the immense observing power of the public.

We are also seeing the emergence of micro powers. Pär showed a sociograph based on traffic analysis - I have done similar graphs as a hobby. With some training anybody can do data mining and public information intelligence gathering that can pick up sensitive information. Fans use the net to track celebrities collaboratively. Blogs act as distributed journalism. Wireless cameras are selling well on the net and political groups build maps of their opposition. That most people do not use these tools is of little matter; the point is that the entrance cost of becoming a surveillance power is decreasing and more and more groups are doing it in one way or another.

The debate is usually framed in terms of protecting the privacy/integrity of people while achieving other social goods like justice, freedom of contract between employers and employees, openness etc. But this balance is assumed to be set by a central decision. While the surveillance powers of the police and employers can be regulated in this way, it is far harder to achieve it among a heterogeneous, international and diffuse mass of mini-powers that rapidly adapt to changing technology. It is of course possible to ban the use of private monitoring, but if the ways monitoring is done change fast enough and are highly diverse the ban becomes unenforceable. In the long run, if nothing changed, a dynamic balance would likely emerge with the right amount of social norms, regulations and leeway for a working society with advanced surveillance. But as things are changing fast this balance will not appear. Worse, rapidly introducing regulations in a panic against the changes tends to freeze current ideas and imbalances in place in slowly adapting rules that may rapidly become irrelevant and undermine legal security.

Perhaps one way of handling this would be technological adaptations. It was suggested that technology should be designed to minimize electronic traces on the net. But that assumes that all software is written according to centralized specifications and not evolution - the quick hack I throw together, which later spreads among my friends (and perhaps is later incorporated into other systems if it is useful), might introduce a new form of electronic traces.

Pär envisioned a 1-10 scale between anarchy and police state, suggesting that reasonable people would seek to stay somewhere in the middle (with some serious spread on exactly where). His claim was that new technology had pushed us towards the police state side. I disagree. New technology has enabled us to go in both directions, quite possibly simultaneously! It is not impossible to envision an anti-terrorism police state that is constantly harassed by citizens spying on each other, institutions and the government. Technology enables things, it very seldom forces particular cultures and societies.

One can add another dimension to his scale, a transparency dimension: in an opaque society it is hard to get information about others. Powerful groups are better at it, but they still have to work hard to do so. In a transparent society information leaks strongly. Again, powerful groups are better at gathering it, but they have a multitude of little brothers picking up pieces all over the place and quite possibly putting them together. In this 2D diagram we get four extremes: opaque anarchy, opaque police states, transparent anarchy and transparent police states.

Leaving the power distribution issue aside, do we have any choice in the level of transparency? It is here where I think the debaters ignored David Brin's The Transparent Society at their own peril (if they knew about the book). His main claim, which I agree with, is that new technology enables far more transparency than opaqueness, and given the human desire for more information about others (and the competitive advantages of such information) we will tend to move towards more transparency. The problems of controlling surveillance when it becomes cheap and technologically easy in a heterogeneous society prevent most attempts at reining it in.

Brin's insight was that if we are to live in a more transparent society, we had better make sure it is a good society. Open societies require accountability besides transparency and freedom of expression. As long as the debate is framed in terms of how to limit and control surveillance rather than how to ensure that it and the gained information are used accountably, it will not produce any gains in democracy. Even a fairly repressive society can create strict regulations for surveillance - more regulation is good news for regulators, administrators and monitors, and not necessarily for the citizens (especially since they are likely to be more regulated than authorities). A society that seeks to construct means of accountability creates feedback loops that help limit abuses and instill the dynamical balance of society and surveillance faster.

Feedback loops and the Little Siblings are a better defense against Big Brother than asking Big Sister to keep an eye on him. She might be good at it, and we might trust her, but she cannot be everywhere all the time.

April 17, 2004

Maps of the academic neighbourhood

I played around with the bibliography file of my research group as a bit of exercise for our course on complex networks. Here are some results, perhaps more interesting as visualisation exercises than for their actual content, unless you happen to be in the SANS group of course.

A graph showing co-authorship of papers (the small ellipses). The fontsizes of the people boxes are proportional to the log of the number of published papers (to avoid professor Lansner swamping us all), the paleness the time since last publication and the color of the articles their age (red 2004, blue sometime in the 1980's).

It is worth noting how much Sten Grillner's research group at the Nobel Institute for Neurophysiology is mixed up with the SANS group; there is a long-standing collaboration on lamprey locomotion here. It is also interesting to see how the clouds of papers to the left indicate the time certain lines of research and researchers were active.

Here is the pure co-authorship graph, where people who have written papers together are joined. The color of the link shows the number of papers.

Note how one can see different external research groups (like Mike Hasselmo's at the top) and internal research topics (the ANN side of SANS to the right, the more biological neural network projects along the left).

These images were made by exporting from Endnote into the Refer format, which is easily parsed. I used Matlab (as always :-) to produce a file readable by Graphviz. Matlab might not be the best string handling language around, but it is good for taking statistics and making interesting color calculations. Neato, the graphviz layout program, turned out to have numerical stability problems when I used longer edges; it might have to do with the density of these graphs.
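For anyone who wants to try the same thing without Matlab, here is a minimal Python sketch of the pipeline: parse Refer records, count papers and co-authorships, and write an undirected Graphviz graph. This is not the script I used. The font size formula, the use of penwidth rather than color for the number of shared papers, and the sample records are all assumptions made up for illustration.

```python
import itertools
import math
import re
from collections import Counter


def parse_refer(text):
    """Return one list of author names per record in a Refer-format string.

    Records are separated by blank lines; each author sits on its own '%A' line.
    """
    records = []
    for chunk in re.split(r"\n\s*\n", text.strip()):
        authors = [ln[3:].strip() for ln in chunk.splitlines() if ln.startswith("%A ")]
        if authors:
            records.append(authors)
    return records


def coauthor_dot(records):
    """Build a Graphviz 'graph' description of who has written papers with whom."""
    papers = Counter(a for authors in records for a in set(authors))
    links = Counter()
    for authors in records:
        for a, b in itertools.combinations(sorted(set(authors)), 2):
            links[(a, b)] += 1

    lines = ["graph coauthors {", "  node [shape=box];"]
    for name, count in sorted(papers.items()):
        # ASSUMPTION: font size grows with the log of the paper count.
        size = 10 + 6 * math.log(count)
        lines.append(f'  "{name}" [fontsize={size:.1f}];')
    for (a, b), count in sorted(links.items()):
        # ASSUMPTION: edge thickness (not color) encodes shared papers.
        lines.append(f'  "{a}" -- "{b}" [penwidth={count}];')
    lines.append("}")
    return "\n".join(lines)


# Hypothetical sample records, not taken from any real bibliography.
SAMPLE = """%A Author One
%A Author Two
%T First hypothetical paper
%D 2003

%A Author One
%A Author Three
%T Second hypothetical paper
%D 2004
"""

if __name__ == "__main__":
    print(coauthor_dot(parse_refer(SAMPLE)))
```

Saving the output as coauthors.dot and running `neato -Tpng coauthors.dot -o coauthors.png` should produce a picture. Names containing double quotes would need escaping, which the sketch skips.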

Finally I tried a 3D layout using my own graph layout program Legba:

Here people were constrained to the x-y plane, while papers were constrained to fixed x-positions depending on age. It looks much better in 3D of course, although the coloring is admittedly an eyesore. An interesting effect of this visualization is that one can see not just topic clusters but also when different people were active.

April 10, 2004

Exporting the Swedish Snus Model

I visited Brussels this week, to participate in a meeting about snus in the Hayek Series organized by Tech Central Station.

Snus?! What does moist oral snuff have to do with Hayek, or Brussels for that matter?

The basic problem with tobacco is that it is addictive and unhealthy. This is true for all forms, be they cigarettes, snuff or sweets. But smoking the stuff makes things worse, since it produces hundreds of carcinogenic and teratogenic chemicals which are directly breathed into the lungs - a recipe for lung cancer, and over 90% of all lung cancers are linked to smoking. Taking tobacco orally avoids the lung cancer issue (oral cancer is still a problem, but you get it from smoking too). This is very visible in the cancer statistics of Sweden, where snus is widely used among men: a significantly lower incidence of lung cancer than in the rest of Europe but only among men; for women the incidence is about equal. See the report on oral tobacco by the European Network for Smoking Prevention for a good review of the field.

So, if people took snus instead of smoking, as in Sweden, hundreds of thousands of lung cancer cases could be avoided every year in Europe. They would still have the addiction problem and other health problems, but it would at least be a better situation.

But snus is banned in all of EU except Sweden.

This has produced the current interesting situation. Many tobacco epidemiologists have realized that harm reduction is a good thing: while the long-term goal is to get people to stop using tobacco as much as possible, in the shorter term it would be healthier if they at least could switch to smokeless tobacco (there are also obvious benefits in terms of passive smoking and lowered fire risks). Thus they have become allied with parts of the tobacco industry (especially Swedish Match, the major snus producer) to have the ban repealed.

So who is fighting to keep the ban? The rest of the anti-tobacco movement, with the Swedish anti-tobacco establishment at the front. This has produced the bizarre situation that Sweden is generally viewed as a demonstration that harm reduction through snus is feasible, but most Swedish tobacco researchers are fiercely opposed to harm reduction. The typical Swedish approach to public health is very much to ban everything potentially unhealthy and not accept any compromises even if they would be helpful. Add to this that it is very easy to claim that anybody suggesting harm reduction has been bought by Big Tobacco or is secretly one of those satanic drug liberals who think the government shouldn't interfere in what people do with their body chemistry, and you have a recipe for stormy and polarized debates.

But the meeting in Brussels was surprisingly pleasant. Dr. Michael Kunze, professor of public health and director of the Nicotine Institute in Vienna, presented the case for harm reduction. Opposing him was Dr. Göran Boëthius from Doctors Against Tobacco, agreeing on the basic science but taking the typical Swedish line that it is better to try to reduce tobacco use than to get people to switch to something safer in the meantime. Mr. Paul Flynn, MP and Vice Chair of the UK House of Commons Drugs Misuse Group, took the general pro-snus position. Nobody on the podium wanted more people to use tobacco, but it was clear that the debate has moved far beyond the Swedish internal debate. Even the report mentioned above from ENSP showed a depth that is rarely seen here. As Dr. Kunze remarked, it was refreshing not to be shouted off the stage as a heretic any more.

It remains to be seen if this thawing of the debate climate will have any effect on the EU ban. But it seems absurd to ban a form of tobacco that is slightly safer than other, legal, forms while still allowing the sale of dangerous things like chewing tobacco. Not to mention subsidizing tobacco farming at the same time (although that waste of money might be ending).

But the resistance to harm reduction exists. In Sweden much drug policy has been built on an implicit model of drug use as an epidemic: users "infect" others by acting as bad examples and the mere showing of drugs can entice people to try them - and then they are irrevocably stuck in use. Any lessening in the war on drugs would be risking having even more people exposed. That this model is not well supported by evidence doesn't change things, since it is promulgated not among scientists but rather among politicians and administrators. Even experiments with giving heroin addicts methadone have been fiercely resisted, and I doubt harm reduction will become even a thinkable thought in Swedish policy circles for many years. Hopefully they can be infected by seeing it implemented out there in Europe.

My own contribution to the discussion was merely to point out why learning theory predicts that people would be less likely to become addicted to snus than to cigarettes, but why it is fairly easy to move to snus from the poison sticks. The amount of nicotine that appears in the blood is roughly the same for both, but the rise time is much faster for smoking. This makes the "credit assignment problem" of learning systems easier - making the association between the action (smoking) and the reinforcement becomes much easier even with a small decrease in the time. The brain learns more from time differences than absolute values. The maintenance of the addiction is then caused by the total amount of nicotine (which causes the cholinergic systems to make more nicotinic receptors). Nicotine is a learning enhancer, and this is usually the problem. It would be nice to have a less addictive learning enhancer with as well documented an effect. Here, by the way, is the Hayek connection: addiction is simply mis-learning. We create spontaneous orders in our brains that are self-destructive.
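The credit assignment point can be illustrated with a toy Python sketch: a delta-rule learner whose update is gated by an exponentially decaying eligibility trace, so that reinforcement arriving long after the action strengthens the association far less. The delays, the trace time constant and the learning rate are hypothetical values chosen only to show the shape of the effect; they are not measurements of nicotine kinetics, and this is not a model of the brain.

```python
import math


def credit(delay_s, tau_s):
    """Share of the reinforcement credited to an action taken delay_s seconds earlier.

    Uses an exponentially decaying eligibility trace with time constant tau_s.
    """
    return math.exp(-delay_s / tau_s)


def learned_strength(delay_s, tau_s, reward=1.0, rate=0.1, trials=50):
    """Association strength after repeated action-reward pairings (delta rule)."""
    weight = 0.0
    for _ in range(trials):
        # The trace scales how much of the prediction error reaches the action.
        weight += rate * credit(delay_s, tau_s) * (reward - weight)
    return weight


if __name__ == "__main__":
    TAU = 60.0  # HYPOTHETICAL trace time constant, in seconds
    # HYPOTHETICAL delays between taking the dose and feeling the effect.
    for label, delay in [("fast route", 10.0), ("slow route", 600.0)]:
        strength = learned_strength(delay, TAU)
        print(f"{label}: delay {delay:.0f} s, learned strength {strength:.4f}")
```

With the same reward and the same number of trials, the fast route ends up with a much stronger learned association than the slow one, which is the asymmetry the argument relies on.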

Dr. Kunze pointed out that most nicotine replacement therapies don't work unless they are heavily supervised. Maybe a nicotine vaccine could help, but the ethical issues involved are problematic (it can only be tested on addicts, but would likely work best on non-users - and be a terrible temptation to government busybodies seeking to limit unhealthy behavior). But it is clear that we need new approaches to get out of addiction. Personally I believe a better understanding of the neurochemistry of memory and conditioning will enable us to use medicines to treat addiction in the not too distant future. There already exist some tentative experimental drugs that lessen the risk of relapses by acting on the amygdala pathways.

During the lunch afterwards I entertained happily shocked participants with explanations of how snus is actually used. It made me realize that I do indeed come from a northern barbaric country.

Are renegade biologists the majority?

Review of Genometry (Ace Science Fiction, edited by Jack Dann and Gardner Dozois).

How many renegade biologists are there? If Genometry is to be believed, about half of all biologists must be ready to escape their labs to unleash chaos and transformation on the world. Of the 11 stories in the collection, 6 involve a renegade biologist in one way or another.

(some slight spoilers below)

Why? Because we fear what is not under control, and biology is experienced as being less controlled than anything – it is our messy bodies, the indomitable forces of nature. There is a common assumption that nature will always triumph over any kind of planning, a deep doubt sprung from long experience and romantic faith.

Biotech is also in the hands of people we do not know or have no reason to trust. If biotechnology had been a common activity like gardening we would likely not fear it as much, even if there were a few outbreaks of nastiness every year thanks to absent-minded little old ladies or malicious kids. Just like we accept driving around in the deathtraps called cars but worry about flying.

Together they produce the renegade biologist image. Unlike the mad scientist of old, they are often driven by idealistic visions. The biologists of “The Invisible Country” (Paul McAuley), “The Kindly Isle” (Frederik Pohl) and “Chaff” (Greg Egan) all refused to work for the system and for aims they considered unethical, and instead escaped to spread their own brand of salvation. The never-seen culprits in “The Pipes of Pan” (Brian Stableford) could also plausibly be driven by some idealistic vision to upset a social order they find suffocating.

To some extent this is just a clever plot twist; the renegade turns out to be the good guy. It can still provide the frisson of ethical doubt about their actions and the sense of wonder of the resulting transformations. But it is also a sign that science fiction authors are less interested in dystopian uses of genetics than they used to be. It is a trope that has been overused and entered common consciousness to the extent that it is uninteresting. It is far more interesting to turn it to good uses that become surprising. Of the 11 stories four show genetic modifications of humans as having at least some potentially good uses, while only three focus on the downside. The rest are somewhere in-between, in the confusing landscape where many possibilities open up and humanity has to figure out how to enfold them in culture.

“Written in Blood” (Chris Lawson) is IMHO the best story in the collection. A wonderful little package of religion, tolerance and biology with a personal approach (my only issue is whether the code used isn’t a translation too). It shows how technology can be adapted to culture, but also how culture is affected by the knowledge and possibilities given by technology. Unlike the other stories the real punch comes from the simplicity of the technology – no viral plagues, no radical re-engineering. It is doable today.

The renegade biologist will soon become as tired a trope as the mad scientist. As we see more biotechnology close up, we will start to recognize that it is not something controlled solely by who knows the science and holds the test tube, but by a complex interplay between many different interests. There is no need for renegade individuals when there are motivations as strange, beautiful and horrible among corporations, governments and little old ladies.