June 26, 2014

Ethics of brain emulations

My paper about brain emulation ethics is now officially out, and open access:

In light of uncertainty about whether emulations are conscious or not, we should be careful with how we treat animal models: we should assume they may have the same mental states as the original if we have included most of their brains. OpenWorm might not have much of a problem yet, but when we get to OpenMouse we had better have virtual painkillers.

June 20, 2014

What's Love Got to do With Medicalization?

In the (so far) final installment in the long series of papers about the neuroscience of love with Julian and Brian, we have arrived at: Brian D. Earp, Anders Sandberg, Julian Savulescu. The medicalization of love. Cambridge Quarterly of Healthcare Ethics. 06/2014; DOI:10.1017/S0963180114000206

It is open peer commentary, so interested scholars might want to submit replies.

This paper responds to the perennial worry "But doesn't this diminish love?!" that always shows up when discussing the neuroscience and neuroenhancement of love. In what ways is medicalization bad? There are a few key worries:

- The Pathologization of Everything: turning everything into some illness.

- The Expansion of Medical Social Control: control over our lives is handed over to medical experts.

- The Narrow Focus on Individuals Rather than Social Context: looking at individuals rather than overarching social problems.

- Narrow Focus on the Biological (or Neurochemical) Rather than the Psychological: bio-reductionism looking at the mechanistic underpinnings of rather complex phenomena and states.

- The Threat to Authenticity and the Undermining of the “True Self”: maybe we are getting separated from unmediated experience and how the world really is.

- Finally, maybe even understanding how love works undermines our experience?

Somewhat unsurprisingly, we do not think these worries are sufficient reason to refrain from the kind of love enhancement research we suggest would be good. Rather, each of these worries does represent a recognized problem, but the solution to the problem is rarely to avoid developing the science. These are social and cultural problems, and hence best met on the social and cultural level.

There is nothing inherent in science and medicine that automatically leads to the outcomes people worry about; rather, assuming that the science inexorably leads there means abdicating responsibility for what happens in our culture of mind, leaving only technocratic choices about what research to do or not do. That is hardly a conclusion most intellectuals actually believe in. Maybe they believe influencing technology is easier than influencing culture, but that is empirically rather doubtful and philosophically inconsistent: technology is a part of culture.

The final worry, that understanding is bad for wonder and true enjoyment, seems to be based on a misunderstanding or maybe an obscurantist view of reality. Maybe some people enjoy things more if they do not understand them, but we also know there are many people who find a fuller enjoyment when they also intellectually grasp what they are caught up in.

June 14, 2014

Two chapters from the future

Two author's preprints of chapters I have in upcoming books (buy the books! tell your friends! cite the chapters! :-) )

Smarter policymaking through improved collective cognition? from Anticipating 2025: A guide to the radical scenarios that lie ahead, whether or not we’re ready for them. Ed. David W. Wood. London Futurists. 2014

Transhumanism and the Meaning of Life from Transhumanism and Religion: Moving into an Unknown Future, eds. Tracy Trothen and Calvin Mercer, Praeger 2014.

Both differ slightly from the final versions, so if you care about fine details, typography or proper error checking you will have to wait for the books.

June 12, 2014

Cabbage, kings, the Turing Test and good books

I talk about the Turing Test, the Eliza effect, deep learning plus reinforcement learning, and the future of AI:

Apropos freeform interviews and discussions, here is a podcast I did with some friends about science fiction, social software, black holes and other things.

June 10, 2014

Chatting about the Turing test

Eugene the Turing test-beating teenbot reveals more about humans than computers - I blog at The Conversation about the recent 'success' in beating the Turing test.

Actually running the Turing test is a bit like putting cats in boxes or threatening people with runaway trolleys in order to learn more about quantum mechanics or ethics. It was intended as a thought experiment, not as a test. Riva Tez pointed me at what Turing said: “[A] statistical survey such as a Gallup poll [would be] absurd [as a way to define or determine whether a machine can think]” (Turing 1950).

However, running trolley problems as experimental psychology has demonstrated odd things about how we think about ethics. Running the Turing test has shown us the Eliza effect: we are very bad at *not* ascribing minds to entities we converse with, even if they are decidedly stupid. Making software that interacts well with humans in a social setting is useful and nontrivial. So running Turing tests is not per se a waste of time, just not a way of measuring AI progress.

June 08, 2014

Do we have to be good to set things right?

I have been somewhat mystified by why some people in my libertarian network have become climate denialists. After all, they are pro-science and generally critical thinkers. Heck, I have often expressed at least climate sceptic sentiments myself. But yesterday Janet Radcliffe-Richards gave an off-the-cuff explanation for climate denialism during the Q&A after a talk:

"We have not been doing anything [morally] wrong, hence climate change cannot happen."

Expressed like that it sounds silly. But she argued in her talk (which built on her 2012 Uehiro lectures) that there is a particular, widespread mode of thinking going on here.

The natural order

According to this account there is a natural order which is not just how things are, but intrinsically also a moral order of how good or meritorious different things are. Consider the traditional Aristotelian-Christian worldview: a fallen Earth at the centre of the universe, surrounded by concentric spheres of increasing purity, with all objects, plants, animals, humans and angels forming a Great Chain of Being going towards physical *and* moral perfection.

This natural order view is a metaphysical presupposition that makes people look for whose fault something bad is: badness has a moral cause, not just a physical cause. And of course, breaking the natural order is also a transgression against the moral order. The naturalistic fallacy ("natural is good") is however only part of it: good and evil are causally active in determining what consequences ensue. It is a just world hypothesis applied to everything. This leads to a very problematic assumption: "We have been bad, and we must be good to put things right". This is the error of thinking that moral duty is causally responsible for good outcomes.

The Copernican and Darwinian revolutions were upsetting because they ruined this certain and neat order. They separated fact and value absolutely. Suddenly there was no plan, nothing that could be interfered with. No balance of nature. No reason to expect harmony. No reason to expect anything to go well. And it is not our fault if things go wrong. As Janet put it, most of the world is just a mess, and not because of morally bad individuals.

But these revolutions have not truly percolated into our mindset, and many of us still run on parts or fragments of the natural order worldview.

I did nothing wrong, so nothing happened

So when confronted with climate change, I think some of us perform roughly the following reasoning: if I gain possession of something through legitimate means, it is legitimately mine. The same is true for the wealth of our society: it has largely come into being through honest work and trade, and hence we are legitimately wealthy. If this is a legitimate process then it cannot have any bad moral or practical consequences. Hence climate change cannot be a problem.

The metaphysical mistake here is of course to assume that morally legitimate actions do not have bad effects.

Of course, this undercutting debunking doesn't mean all forms of climate scepticism are erroneous, just that there is loads of motivated cognition going on. In fact, it cuts the other way too: the view that we have been doing something immoral (consumerism, capitalism, rejection of traditional values, whatever) and hence should and will be punished is equally wrong. Climate change will mainly hurt poor people who did not historically burn fossil fuels, and genuinely workable interventions are unlikely to be austerity programs or to consist of assigning blame to those who are major causes. One can and should criticise proposals for fixing the climate on empirical and moral grounds: a lot of them are no good.

One could argue that the costs of externalities like pollution have not been borne by the polluters, and that this makes their gains illegitimate. But in order to accept this, a person who thinks he has acted legitimately has to overcome the "moral = safe" intuition. If you start out already thinking that what you do might be morally suspect, then you are already open to the possibility that the consequences will be morally or practically bad. But few people are that open about their own core values.

My arguments get knocked down, but I get up again

The natural order argument shows up in all sorts of places, from anti-GMO and anti-vaccination views to conservative/reactionary arguments that the old order is the right one. It often motivates an unease about change, but other arguments are then used to defend the view: when those arguments are knocked down, the proposer does not change their mind, since those arguments were never the cause of their belief.

The associated fact/value confusion also shows up in the defence of many beliefs: because the motivation for developing the belief was a moral one, arguments against the belief are taken to imply that the critic is driven by immoral motivations (or even that arguing against it is immoral). Janet used some forms of political correctness as an example in her lecture series, where badly supported views used to support high-minded ideals are defended fiercely against critics, who are denounced as being against the ideals since they critique the views. If you are sceptical of the harms of pornography you must be in favour of the subjugation of women. In the climate debate we see similar polarization: some people imagine their opponents must have been bought by the Koch brothers/the climate bureaucratic complex in order to argue as they do, rather than assuming they actually hold the views they hold for normal reasons.

Most readers will no doubt feel that they are too clever to fall into this mess of confusion: it is their opponents who are mixing up facts and values! But unless you have observable objective reasons to think the other side is thinking very differently from your side, with different biases and metaphysical confusions, the best hypothesis is that you are as fallible as them.

As long as we think we have to be good to set the world in order, we will either be paralysed by utopianism (nothing can be done until everybody is united in niceness), assume that what we do to fix things is the right thing and must not be criticised, or conclude that we should be fatalistic about the threats and problems since they are just the way things are. None of these is helpful.

June 06, 2014

Daemon and Freedom(tm): superintelligence is more interesting than utopia

I just finished Daemon and Freedom(tm) by Daniel Suarez. Daemon was brilliant, Freedom(tm) too earnest and closed to work.

Daemon starts out as a technological murder mystery and soon evolves into a fun technothriller. What I enjoyed were the realistic descriptions of hacks: most are plausible given current technology, at least well into the later stages. The core conceit is one of those ideas that could work in the real world to some extent, but not to the extent it does in the novel... at least not without superintelligence.

That was actually what really sold the story to me. In my work I often deal with issues of artificial intelligence risk, and two of the more common stupid objections are the assumptions that (1) software isn't dangerous, and (2) just being smart is not dangerous. Showing how software can manipulate the "real" world to achieve non-trivial and dangerous ends is useful: Daemon does a good job of showing how current systems of work-orders, outsourcing and net-connected devices could be used. Similarly, the overall plot is to a large extent predicated on a very smart player behind the scenes. As written, this is somewhat unrealistic (but it is the one acceptable break from reality in the story). But if one instead plugs in the forms of superintelligence we study and allows for more adaptivity than the Daemon is claimed to have, then the novel works as a great intuition pump for just how dangerous a very smart software system could be, even in the physical world.

I read science fiction mainly for ideas, so the above motivation is enough for me to like the novel. That the characters are fairly flat comes with the thriller territory and that the climax involves some implausible weapons (the razorbacks, really?) is OK.

Freedom(tm), however, suffers from a double affliction of utopianism. The first problem with utopianism is that when you describe a society you really like, it becomes hard to allow for any flaws or weaknesses in it. The society becomes a Mary Sue: anybody who disagrees is either misguided or evil, obvious flaws are ignored, and there is no interest in even looking for complications or problems. The darknet society described in the novel is eco-friendly, tolerant, creative... if there is a positive adjective, it has it. The fact that the people involved are drawn from the same stock as the normal sheeple, often depicted in the novel as passive victims/collaborators, is glossed over. Although there would plausibly be some rather deep ideological and emotional rifts, everybody except one member behaves themselves.

The second kind of utopianism is the techno-utopian assumption that technology works. I am fine with novels where advanced technology does amazing things: the problem is when it always works. We know from real life that technologies have flaws and sometimes fail because of unpredictable or outside influences. For most novels it does not matter greatly if phone calls are occasionally interrupted and the radar occasionally shows spectral blips: it can be used to give the story verisimilitude or be ignored like characters needing to go to the bathroom. However, when essentially all parts of the plot hinge on technology functioning flawlessly, and it does, then the suspension of disbelief starts to fray. This is especially true when the technology is supposedly widely distributed and under constant change and attack.

Note that I am willing to cut the Daemon itself slack: just as hard science fiction stories are allowed one acceptable break from physics (typically an FTL drive) without losing too much, I think a technological story can allow itself one flawless technology. The problem is when flawless technology is everywhere.

Together, the two kinds of utopianism make Freedom(tm) didactic, preachy and far less interesting than the open-ended Daemon. The novel, despite its multiple subplots, is essentially a linear game leading up to a somewhat anticlimactic boss fight. It has some neat ideas, but while Daemon threw in ideas and allowed them to blossom into disturbing possibilities, Freedom(tm) forces them all to aim at a happy ending, no matter what.

When reading both novels I was strongly reminded of some of my own old work: the roleplaying setting InfoWar. Robert and I wrote it back in 1998, based on some scenario planning exercises we did in 1997. Let's ignore the fact that we were indeed almost as preachy as Freedom(tm) (and far less skilled writers). It is somewhat worrying to realize that many elements from the more dystopian models we used have plausibly come true: remember, we wrote this three years before the War on Terror and 15 years before Snowden. In our setting there is also a darknet fighting the Powers That Be, although in this case held together mostly by ideology and crypto-currencies. (In these bitcoin-saturated days it actually feels odd that digital currencies do not play a larger role in Suarez's novels, until you realize that Daemon was written in 2006 and bitcoin showed up in 2008.) I can totally imagine awesome crossovers between the settings.

Another work that comes to mind is Charles Stross' excellent Halting State. While InfoWar had ideas about distributed high-tech subversion and rebellion similar to those in Suarez's novels, Halting State is the great MMORPG novel. Stross covers some of the same ground as Suarez in analysing just how pervasive the digital world has become in the "real" world, but he also plays with just how powerful online games can be as tools for recruitment, coordination, funding and communication. What makes Halting State work so well is that it doesn't up the ante to global conflict (although Suarez seems to make "global = US") or make the stakes too high: yes, they are very high for the protagonists, but it really is just a local issue. I think this is the more European approach: things can matter even if they just involve the Republic of Scotland.

In the end, good science fiction (and thrillers) deal in the currency of ideas. The best ideas are the fertile ones, the ones that trigger new questions and ideas. Daemon is full of them and allows them free rein; Freedom(tm) ironically doesn't give them enough freedom.

June 05, 2014

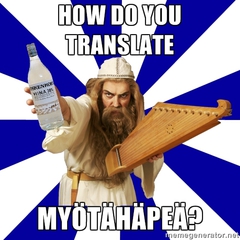

Myötähäpeä and surprise shadows

Today I learned a new Finnish word that I think we should introduce in English: Myötähäpeä. Kaj Sotala described it as "co-shame" or "shared shame": "Feeling bad as a result of seeing someone else do something that causes them to embarrass themselves in the eyes of others." It is a kind of empathetic opposite to Schadenfreude.

I find it interesting that there seem to be many more "untranslatable" (i.e. unique to one language) words for mental states than for other things (the other standard category where cultures differ a lot is words for relatedness). Just look at this and this. These lacuna words are largely semantic gaps: one could usually just add a word for the concept with the right grammatical form and everything would work. The mental states themselves often carry over with no problem between cultures, although how often they come up and how people behave when experiencing and expressing them vary a lot. The fact that words for these states so often exist in just one language suggests that it is these uses, more than the state itself, that determine whether a state gets a word or not.

Every morning when I leave home I pass my gate post, and I briefly look at its flat stone surface for a "concept of the day". Today it was "surprise shadow": the unexpected confluence of a shadow with a particular surface. Yesterday it was "the feeling of dryness due to sun after a rain". Other fun examples have been "the feeling of missing a habitual action", "awareness of the ubiquity of very pale green colours" and "potential white dust too small to see that may or may not have been there". It is a fun exercise.

It also demonstrates that it is very easy to construct new concepts: each of us could make literally thousands every day, yet our languages at most run into hundreds of thousands of core concepts. For the rest, we combine the others rather than invent a new proper concept and try to introduce it into language. This is a kind of competition process: myötähäpeä is far more relevant, cohesive and useful than surprise shadows.