October 07, 2010

Why early singularities are softer

Carl Shulman from SIAI and I gave a talk yesterday at ECAP10: "Implications of a Software-limited Singularity". We give an argument for why - if the AI singularity happens - an early singularity is likely to be slower and more predictable than a late-occurring one.

Here is the gist of our argument:

Some adherents to the singularity hypothesis (Hans Moravec, Ray Solomonoff and James Martin, for example) use variants of the following "hardware argument" to claim we will have one fairly soon:

- Moore's law will lead to cheap human-level CPUs by, e.g. 2040.

- Cheap human-level CPUs will lead to human-level AI within 30 years.

- Human-level AI will lead to an intelligence explosion.

Therefore,

- Intelligence explosion by 2070.

Premise 1 is pretty plausible (I was amazed by the optimism in the latest semiconductor roadmap). Premise 3 is moderately plausible (skilled AI can be copied and run fast, and humans have not evolved to be optimal for AI research). Premise 2 is what critics of the argument (Lanier, 2000; Hofstadter, 2008; Chalmers, 2010) usually attack: just having amazing hardware doesn't tell us how to use it to run an AI, and good hardware is not obviously going to lead to good software.

A sizeable part of our talk dealt with the evidence for hardware actually making AIs perform better. It turns out that in many domains the link is pretty weak, but not non-existent: better hardware does allow some amazing improvements in chess, text processing, computer vision, evolving new algorithms, using larger datasets and - through the growth of ICT - more clever people getting computer science degrees and inventing things. Still, software improvements often seem to allow bigger jumps in AI performance than hardware improvements. A billionfold increase in hardware capacity since 1970 and a 20-fold increase in the number of people in computer science have not led to an equally radical intelligence improvement.

This suggests that the real problem is that AI is limited by serial bottlenecks. In order to get AI we need algorithmic inventions, and these do not occur much faster now than in the past, for three reasons: they are very rare; they require insights or work efforts that do not scale with the number of researchers (10,000 people researching for one year likely cannot beat 1,000 people researching the same thing for ten years); and experiments must be designed and implemented (this takes time, and you cannot move ahead until you have the results). So if this software bottleneck holds true we should expect slow, intermittent and possibly unpredictable progress.

When you run an AI, its effective intelligence will be proportional to how much fast hardware you can give it (e.g. it might run faster, have greater intellectual power or just be able to exist in more separate copies doing intellectual work). More effective intelligence means a bigger and faster intelligence explosion.

If you are on the hardware side (you think hardware is what limits AI), how much hardware do you believe will be available when the first human-level AI occurs? You should expect the first AI to be pretty close to the limits of what researchers can afford: a project running on the future counterpart to Sequoia or the Google servers. There will not be much extra computing power available to run more copies. An intelligence explosion will be bounded by the growth of more hardware.

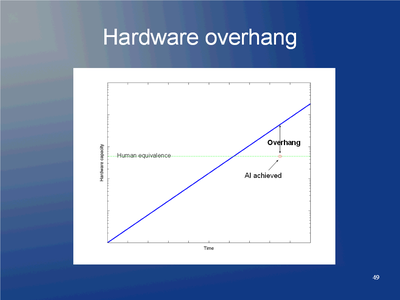

If you are on the software side, you should expect that hardware has continued to increase after passing "human equivalence". When the AI is finally constructed after all the human and conceptual bottlenecks have passed, hardware will be much better than needed to just run a human-level AI. You have a "hardware overhang" allowing you to run many copies (or fast or big versions) immediately afterwards. A rapid and sharp intelligence explosion is possible.
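To make the "hardware overhang" idea concrete, here is a minimal back-of-the-envelope sketch. It is not from the talk: the function name, the 2040 "parity year" (borrowed from the "e.g. 2040" in the hardware argument above), the constant research budget and the two-year doubling time are all illustrative assumptions. It only shows how the number of copies you could run at the moment of the breakthrough grows with how late the software arrives.

```python
def hardware_overhang(arrival_year, parity_year=2040, doubling_time_years=2.0):
    """Rough number of human-level AI copies a frontier project could run
    in the year the software finally works.

    Assumptions (illustrative, not from the talk):
    - in parity_year a frontier project can just afford to run ONE copy;
    - the hardware a fixed budget buys doubles every doubling_time_years;
    - the budget itself stays constant.
    """
    if arrival_year < parity_year:
        raise ValueError("before parity the hardware cannot run even one copy")
    doublings = (arrival_year - parity_year) / doubling_time_years
    return 2.0 ** doublings


# Hardware-limited case: the AI arrives as soon as the hardware allows.
print(hardware_overhang(2040))  # 1.0

# Software-limited cases: the insight arrives 10 or 30 years after parity.
print(hardware_overhang(2050))  # 32.0
print(hardware_overhang(2070))  # 32768.0
```

Under these toy numbers an AI that arrives right at parity starts as a single copy and can only grow as fast as hardware does, while one that arrives thirty years later can immediately be run in tens of thousands of copies. The exact figures mean little; the point is the exponential dependence on the delay.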

This leads to our conclusion: if you are an optimist about software, you should expect an early singularity that involves an intelligence explosion that at the start grows "just" as fast as Moore's law (or its successor). If you are a pessimist about software, you should expect a late singularity that is very sharp. It looks like it is hard to coherently argue for a late but smooth singularity.

Discussion

This is a pretty general argument and maybe not too deep. But it does suggest some lines of inquiry and that we might want to consider policy in the near future.

Note that sharp, unpredictable singularities are dangerous. If the breakthrough is simply a matter of the right insights and experiments finally cohering (after endless disappointing performance over a long time), and it then leads to an intelligence explosion nearly instantly, then most societies will be unprepared, there will be little time to make the AIs docile, and there will be strong first-mover advantages and incentives to compromise on safety. A recipe for some nasty dynamics.

So, what do we do? One obvious thing is to check this analysis further. We promise to write it up into a full paper, and I think the new programme on technological change we are setting up in Oxford will do a lot more work on looking at the dynamics of performance increases in machine intelligence. It also suggests that if AI doesn't seem to make much progress (but still does have the occasional significant advance), leading us to think the software limit is the key one, then it might be important to ensure that we have institutions (such as researchers recognizing the risk, incentives for constraining the spread and limiting arms races, perhaps even deliberate slowing of Moore's law) in place for an unexpected but late breakthrough that would have a big hardware overhang. But that would be very hard to do - it would almost by definition be unanticipated by most groups, and the incentives are small for building and maintaining extensive protections against something that doesn't seem likely. And who would want to slow down Moore's law?

So if we were to think a late sharp AI singularity was likely, we should either try to remove software limitations to AI progress in the present (presumably very hard: if it were easily doable somebody would have done it) or try to get another kind of singularity going earlier, like a hardware-dominated brain emulation singularity (we can certainly accelerate that one right now by working harder on scanning technology and emulation-related neuroscience). If we are unlucky we might still get stuck on the neuroscience part, and essentially end up with the same software limitation that makes the late AI singularity risky - hardware overhang applies to brain emulations too. Brain emulations might also lead to insights into cognition that trigger AI development (remember, in this scenario it is the insights that are the limiting factor), which in this case would likely be a relatively good thing since we would get early, hardware-limited AI. There is still the docility/friendliness issue, where I think emulation has an advantage over AI, but at least we get more time to try.

Posted by Anders3 at October 7, 2010 11:49 AM