February 23, 2009

The Future of Smart: Intelligence in 2034

This is an essay based on the speech I gave yesterday at my friend David Wood’s birthday party and on discussions I had with the other guests.

One of the tricks of the trade among futurologists is to look as far back as one is trying to look forward, and try to learn from the things that have changed in the meantime. So when I look back 25 years to 1984 I see myself playing with my Sinclair ZX Spectrum (48 kilobytes of memory! 256×192 screen resolution! 16 colors!). On Swedish state television (only two channels) someone was demonstrating that you could use a home PC to balance your checkbook or store recipes. That year Apple launched its famous “1984” advert, presenting the Macintosh as a rebellion against the authoritarian power of IBM.

What could we have predicted in 1984 about the computers in 2009, and what would we have missed? I think most people already knew that computers were getting cheaper, smaller and better. So it would have made sense for us to think that home computers would be in every home. They would also be much better than the ones we had then. But we would have had a far harder time predicting what they would be used for. It was not obvious that the computer would eat the stereo.

The biggest challenge to imagination was the Internet – already existing in the background, foreshadowed by the spread of bulletin board systems run by amateurs (who were already pirating software and spreading *very* low-resolution naughty pictures). The Internet changed the rules of the game, making the individual computers far more powerful (and subversive) by networking them. From what I remember of early 80’s computer speculation the experts were making the same mistake as the television program: they could see uses in communication, data sharing and doing online shopping, but not Wikipedia, propaganda warfare, spam, virtual telescopes and the blogosphere.

Moore’s law exists in various forms, but the one I prefer to use is that it takes about 5.6 years for commodity computer performance per dollar to become ten times better. Over 25 years that is about 4.5 factors of ten, or roughly 30,000 times as much computer power per dollar. This means not just more and more powerful systems, but also smaller and more ubiquitous ones. Most of us do not need supercomputers (except for computer games, one of the big drivers of computer power). But we get more and more computers in more and more places. Some current trends are rebelling against the simple “more power” paradigm and instead looking for more energy-efficient or environmentally friendly computers. That way they can become even more ubiquitous.
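The 30,000 figure is simple compounding: 25 years contain 25/5.6 ≈ 4.46 tenfold steps, and 10^4.46 is about 29,000. A small sketch of the arithmetic, where the 5.6-year tenfold time is the rule of thumb from the text and the conversion to a doubling time is only added for comparison:

```python
import math

def growth_factor(years: float, tenfold_time: float = 5.6) -> float:
    """Price-performance multiplier after `years`, assuming performance per
    dollar improves tenfold every `tenfold_time` years."""
    return 10 ** (years / tenfold_time)

def doubling_time(tenfold_time: float = 5.6) -> float:
    """Equivalent doubling time in years for the same exponential growth."""
    return tenfold_time * math.log10(2)

print(f"25 years: x{growth_factor(25):,.0f}")
print(f"doubling time: {doubling_time():.2f} years")
```

The 25-year factor comes out at roughly 29,000, and a tenfold step every 5.6 years corresponds to a doubling roughly every 1.7 years.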

Maybe the biggest innovation over the next 25 years will be the biodegradable microprocessor, perhaps printed on conductive and bendable plastic. Like RFID tags (which will also be everywhere and possibly merged into the computers), such processors can be put anywhere. Every object will be able to network, and know what it is, where it is, what it is doing, who owns it and how it is to be recycled.

A world of smart objects is a strange place. Thanks to the environment (and of course one’s personal appliances) one will (in the words of Charles Stross) never be lost, never alone and never forget. It will be a googleable reality. Privacy will be utterly changed, as will concepts of property and security. But just as it was hard in 1984 to imagine Wikipedia, we cannot really imagine how such a world will be used. The human drivers will be the same, and most of the things and services we have today will of course be around in some form, but the truly revolutionary part is what gets built on top of these capabilities. It would be a world of technological animism, where every little thing has its own techno-spirit. The freecycling grassroots movements that currently avoid waste by giving things members do not need to other members have become possible (or at least effective) thanks to the Internet. In a smart object world a request for a widget may trigger all nearby widgets to check how often they have been used, and the unused widgets to ask their owners whether they would rather give them away. The result is less waste, a more efficient market and possibly also stronger social interactions.
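A toy sketch of the widget scenario, purely to make the mechanism concrete. The `Widget` class, the distance `radius` and the `max_uses` threshold are all hypothetical; a real smart-object network would need discovery, messaging and owner consent on top of this.

```python
import math
from dataclasses import dataclass

@dataclass
class Widget:
    owner: str
    x: float             # position of the widget
    y: float
    uses_last_year: int  # from the widget's own (hypothetical) usage log

def handle_request(widgets, x, y, radius=1.0, max_uses=2):
    """Return the owners to ask, least-used widget first.

    Every widget within `radius` of the requester checks its usage log;
    rarely used ones (at most `max_uses` uses) ask their owners whether
    they would rather give them away."""
    candidates = [
        w for w in widgets
        if math.hypot(w.x - x, w.y - y) <= radius and w.uses_last_year <= max_uses
    ]
    candidates.sort(key=lambda w: w.uses_last_year)
    return [w.owner for w in candidates]
```

For example, with an unused widget owned by Alice nearby, a heavily used one owned by Bob nearby and an unused one owned by Carol far away, only Alice would be asked.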

The real wildcard in the world of smarts over the next 25 years is of course artificial intelligence. Since day one the field has made overblown claims about the imminence of human-level intelligence, only to have them thrown back in its face shortly afterwards. Yet the field has made enormous and broad progress; we just rarely notice it, since AI is seldom embodied in a beeping kitchen robot.

The most impressive AI application right now might not be Deep Blue’s win over Kasparov in 1997 but the rapid improvement in driving shown in the DARPA Grand Challenge for driverless cars. Getting vehicles to move around a real environment among other vehicles while obeying traffic rules is both a very hard and a very useful problem. If the current improvements continue we will see not just military unmanned ground vehicles before 2034, but likely automated traffic systems. Given that traffic accidents are one of the largest causes of accidental death and that cars are already platforms with rapidly growing computer capacity, car automation has a good chance to become a high priority. The individual car smarts become amplified by networking: when the system of cars “knows” where they are going, where they are and what their individual conditions are, it can produce emergent improvements such as forming groups that move together, detecting accidents or road faults, learning how to navigate treacherous stretches, or emergently inventing and learning better ways to plan traffic. Whether humans will give up their power over their cars remains to be seen (there are many issues of trust, legal responsibility and the desire for control), but sufficiently good smart traffic systems could become a strong incentive.

I do not see any reason why we could not create software “as smart as” humans or smarter, however ill-defined that proposition is. Human thought processes occur embedded in a neural matrix that did not evolve for solving philosophy problems or trading on stock markets – such abilities are the froth on top of a deep system of survival-oriented solutions. That is why it might be easier to make intelligent machines than machines that actually survive well in a real, messy physical environment (most current robots cheat by working in simple environments, by being designed for particular environments or just by being rugged enough not to care – but you would not want that for your kitchen robot). But the environment of human thought, communication and information processing might be ideal for artificial intelligences.

I would give the emergence of “real” AI a decent chance of happening over the next 25 years. I also see no reason why it would be limited to mere human levels of intelligence. But I also believe that intelligence without knowledge and experience is completely useless. So AI systems are going to have to learn about the world, essentially undergoing a rapid childhood. That will slow things down a bit. But we should not be complacent: once an AI program has learned enough to actually work in the real world, solving real problems, it can be copied. The total amount of smarts can be multiplied extremely rapidly this way. Just as networking makes smart objects much more powerful, copying can make even smart software that was expensive to create very cheap and ubiquitous.

I do not think the AI revolution is likely to be heralded by a strong superintelligence calling all phones simultaneously to tell mankind that there is a new boss. I think it will rather be heralded by the appearance of a reasonably cheap personal secretary program. This program understands people well enough to work as a decent assistant on everyday information tasks – keeping track of projects, filling in details in texts, gathering and ordering information, suggesting useful things to do. If it works, it would improve the efficiency of people, in turn improving the efficiency of the economy worldwide. It might appear less dramatic than the phone call from the AI-god, but I suspect it will be much more profound. Individuals will be able to do much more than they could before, and they will be able to work better together. As intelligence proliferates everywhere things will speed up. Including, of course, the development of better AI (especially if the secretary software is learning and sharing skills). Rather than a spike of AI divinity we get a swell of a massively empowered mankind.

Intelligence isn’t everything, in any case. One can do just as well with a lot of information. As the saying goes, data is not information is not knowledge is not wisdom. But one can often extract a later item in that chain from an earlier one. If you have enough information and a clever way of extracting new information from it, you can get what *looks like* superintelligence. Google is a good example: using a relatively simple algorithm it extracts very useful information from the text and link structure of web pages. The “80 Million Tiny Images” project demonstrated that having enough examples and some semantic knowledge was enough to do very good image recognition. Interface the AI secretary with Google 2.0 and it would appear much smarter, since it would be drawing on the accumulated knowledge of the whole net.
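The text does not name Google’s algorithm, but the original PageRank idea is the classic example of extracting useful information from link structure alone: a page is important if important pages link to it. Below is a minimal textbook power-iteration sketch, assuming the commonly quoted damping factor of 0.85; it ignores page text entirely and is not Google’s production ranking system.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Rank pages by link structure alone.

    `links` maps each page to the list of pages it links to. Each round,
    every page passes `damping` of its rank along its outgoing links and
    the remaining (1 - damping) is spread evenly over all pages."""
    pages = set(links) | {p for targets in links.values() for p in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                for t in pages:
                    new[t] += damping * rank[page] / n
        rank = new
    return rank
```

In a small web where pages a, b and d all link to c, c ends up with the highest rank even though nothing about its content is inspected.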

The classical centralized “mind in a box” is intensive intelligence, while the distributed networks of intelligence are extensive intelligence. Intensive intelligence thrives in a world of powerful processors and centralized information. It is separated from the surrounding world by some border, appearing as an individual being. Extensive intelligence thrives in a world of omnipresent computer power, smart objects and fluid networks. It is not being-like, but more of a process. We might have instances already in the form of companies, nations and distributed problem solving like human teams playing alternate reality games. As time goes on I expect the extensive intelligences to become smarter and more powerful, both because of the various technological factors I have mentioned earlier but also because we will work hard on designing better extensive intelligence. Figuring out how to make a company or nation smart is worth a lot.

The real challenge for this scenario might be the right interface for all the smart and intelligent stuff. As the Microsoft paperclip demonstrated, if a device does not interact with us in the way we want it will be obnoxious. On my trip to London the nagging of the car GPS was a constant source of joking conversation: it was interrupting our discussions, it had a tone suggesting an annoyed schoolmarm, it was simply not a team player. Smart objects need to become team players, both technologically and socially. That is very hard, as anybody who has tried to program distributed systems or design something really simple and useful knows. Designing social skills into software might be one of the biggest businesses over the next 25 years. Another approach is to make the smart objects mute and predictable: rather than having unsettling autonomy and trying to engage with us they are just quietly doing their jobs. But under the hood the smarts would be churning, occasionally surprising us anyway.

Can we humans keep up with this kind of development? We are stretched but stimulated by our technological environment. It is not similar enough to our environment of evolutionary adaptedness, so we suffer obesity, back pain and information stress. But we also seem to adapt rapidly to new media, at least if we grow up with them or have a personal use for them. The Flynn effect, the rise of scores on IQ tests in most countries over time, could in part be due to a more stimulating environment where the important tasks are ever more like IQ test questions. Television show plots are apparently growing more and more complex and rapid, driven by viewer demands and stimulating viewers to want more complexity. Computer games train attention in certain ways, making players better at certain kinds of hand-eye coordination and rapid itemization. Whether these changes are good is beside the point; they have their benefits and drawbacks. What they seem to suggest is that we will definitely think differently in a smarter environment.

We can of course enhance ourselves directly. Current cognition enhancing drugs seem able to improve aspects of memory, attention and alertness, and possibly also affect creativity, emotional bonding or even willpower. These effects have their limits and are probably task-specific: the kind of learning ability that is useful for cramming a textbook might not be useful for learning a practical skill, and the narrow attention that is great in the office is dangerous on the road. I believe that by 2024 cognition enhancers will be widespread, but their use will be surrounded by a culture of norms, rules of thumb and practical knowledge about which enhancer to take in which situation, not unlike how we today use and misuse caffeine and alcohol. If we are clever we can make use of the smart environment to support good decision-making about our own bodies and minds.

While brains can be trained (for example by computer games intended to stimulate working memory) we might wish to extend them. We can already make crude neurointerfaces that link brains to computers. It is just that the bandwidth is very small and signals are usually sent one-way. More advanced interfaces are on their way, and by 2024 I expect them to have enormously improved the quality of life of many people who are currently profoundly disabled. But even if neurointerfaces are worthwhile for someone lacking limbs or a sense, or suffering from paralysis or other neurological disorders, that does not mean they are worth the cost, risk and effort for healthy people. To be viable a neurointerface has to be as cheap, safe and easy to use as LASIK surgery. That will likely take a long while. But once the “killer application” is there (be it obesity or attention control) other applications will follow, and before long the neurointerface might become a platform, just like the cellphone, for various useful and life-transforming applications. I’d rather not be the guinea pig, though.

Since I am blondish I think I am allowed to tell a blonde joke:

- How do you confuse a blonde?

- You put him in a circular room and tell him to sit in the corner.

- How does a blonde confuse you?

- He comes out and says he did it.

Intelligence is surprising. That is the nature of truly intelligent thought: it is not possible to predict without having the same knowledge and mental capacity. Since there are so many different kinds of possible minds and situations we should expect the unexpected. We *know* we are going to be surprised in the future. So we should adapt to it and try to make sure the surprises will be predominantly nice surprises.

A world with thousands or millions of times as much smarts as the present will be very strange. Raw intelligence may prove less important there than other traits, just as physical strength is no longer essential in a mechanized world. Instead soft things like social skills, moral compass, kindness and adaptability may become the truly valued traits.

February 20, 2009

Enhance me naturally so I can help others

At last my friend Lena's article is out: Enhancing concentration, mood and memory in healthy individuals: An empirical study of attitudes among general practitioners and the general population -- Bergström and Lynöe 36 (5): 532 -- Scandinavian Journal of Public Health.

Enhancing for altruistic reasons (to help others) was also much more acceptable to the study participants than enhancing for one's own interest. Maybe that is just Swedish egalitarianism, but it could also be that many are unwilling to allow enhancement if it is perceived as giving a few an unfair advantage.

Whether the study shows that people are against enhancement or not is less clear. It was pretty clear that in each case the majority did not think enhancers should be prescribed. Among the general public the most accepted enhancement was concentration enhancement benefiting society, at 32.7%, and the least popular was selfish mood enhancement, at 10.1%. For GPs, the most accepted was altruistic mood enhancement, at 22.8%, and the least accepted was again selfish mood enhancement, at just 2.0%. But this shows that there is a pretty sizeable subset of the public and GPs who are fine with enhancement. That it is smaller than the numbers in self-selected surveys like those run by Nature or Aftonbladet is not surprising. In a real situation I think people are more likely to use enhancers themselves than their statements about permissibility in general would suggest. The Riis, Simmons and Goodwin study shows a pretty strong decoupling between willingness to enhance oneself (affected by self-image?) and willingness to allow others to enhance (affected by social ideas?). Similar to this study, Riis et al. also saw that mood was more controversial to enhance than cognition - yet people are taking significant amounts of antidepressants.

The study also demonstrates that people allow enhancement much more readily when it is done with a "natural remedy" than with a "pharmaceutical". The increase in acceptability was on the order of 30%. Clearly naturalness has a strong effect on people's perception of acceptability. Maybe it is the fear of overstepping "the natural order" that is hiding here: if you can get an effect by a "natural" tool, then it must have been meant to be found. This is of course not very convincing philosophically speaking, but a lot of this debate is not about neat ethics but the rather thick choices people make when they define themselves and their lives in terms of cultural constructions such as their view of nature.

February 17, 2009

It is my memory, and I'll edit it if I want to

Practical Ethics: Am I allowed to throw away *my* memories: does memory editing threaten human identity? - I blog about the recent paper where propranolol was used to weaken an induced fear response.

No news for anybody who has read my paper or earlier blog posts on it.

February 13, 2009

Digga Darwin

Everybody seems to be celebrating Charles Darwin's bicentenary in different ways. During a talk I played around with setting up a little evolutionary process just for fun.

Agents move around on Botticelli's Venus, leaving coloured trails. They get scores based on how well the color of the pixel they enter matches some of their genes, and move depending on other genes. Every iteration the lowest-scoring agent is removed and replaced with a mutated copy of another one. The result is that agents evolve the ability to find areas that give them higher scores - or create them.
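The rule described above is a steady-state evolutionary algorithm. Below is a minimal sketch of it. It assumes a random RGB grid in place of the Venus image (no image loading), a single "turn probability" as the movement gene, a score of 1 minus the mean colour difference averaged per step, and a uniformly random parent for the replacement. All of these details are illustrative assumptions, not the code used in the talk.

```python
import random

SIZE = 64  # stand-in for the painting: a SIZE x SIZE grid of RGB values in [0, 1]
DIRECTIONS = [(1, 0), (-1, 0), (0, 1), (0, -1)]

def random_genome(rng):
    return {
        "colour": tuple(rng.random() for _ in range(3)),  # colour the agent "likes"
        "turn": rng.random(),  # movement gene: chance of a new direction each step
    }

def mutate(genome, rng, sigma=0.05):
    def clip(v):
        return min(1.0, max(0.0, v))
    return {
        "colour": tuple(clip(c + rng.gauss(0.0, sigma)) for c in genome["colour"]),
        "turn": clip(genome["turn"] + rng.gauss(0.0, sigma)),
    }

def new_agent(genome, rng):
    return {"genome": genome, "x": rng.randrange(SIZE), "y": rng.randrange(SIZE),
            "dir": rng.choice(DIRECTIONS), "total": 0.0, "age": 0}

def fitness(agent):
    # Average score per step, so newborn agents are not compared unfairly
    # with agents that have been accumulating score for a long time.
    return agent["total"] / agent["age"] if agent["age"] else 0.0

def step(agent, image, rng, trail=0.1):
    genome = agent["genome"]
    if rng.random() < genome["turn"]:
        agent["dir"] = rng.choice(DIRECTIONS)
    dx, dy = agent["dir"]
    agent["x"] = (agent["x"] + dx) % SIZE  # wrap around the edges
    agent["y"] = (agent["y"] + dy) % SIZE
    pixel = image[agent["y"]][agent["x"]]
    # Score for entering this pixel: 1 minus the mean absolute colour difference.
    agent["total"] += 1.0 - sum(abs(p - c) for p, c in zip(pixel, genome["colour"])) / 3
    agent["age"] += 1
    # Leave a coloured trail by blending the pixel towards the agent's colour.
    image[agent["y"]][agent["x"]] = tuple(
        (1 - trail) * p + trail * c for p, c in zip(pixel, genome["colour"]))

def evolve(n_agents=50, iterations=2000, seed=0):
    rng = random.Random(seed)
    image = [[tuple(rng.random() for _ in range(3)) for _ in range(SIZE)]
             for _ in range(SIZE)]
    agents = [new_agent(random_genome(rng), rng) for _ in range(n_agents)]
    for _ in range(iterations):
        for agent in agents:
            step(agent, image, rng)
        # Remove the lowest-scoring agent; replace it with a mutated copy of another.
        worst = min(range(n_agents), key=lambda i: fitness(agents[i]))
        parent = rng.choice([a for i, a in enumerate(agents) if i != worst])
        agents[worst] = new_agent(mutate(parent["genome"], rng), rng)
    return agents, image
```

Because agents repaint the pixels they visit, they can raise their own scores by creating areas of "their" colour, which is the "or create them" effect described above. Averaging the score per step is a design choice of this sketch; with a cumulative score every newborn would be removed again immediately.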

Ok, the result is at best like Rauschenberg and at worst just art vandalism. But it is pretty amazing what this very simple rule can rapidly generate, given that the fitness function is not trivial, the environment is changing and there are co-evolving organisms.

Celebrating Darwin is often conflated with celebrating the theory of evolution. But even without the discovery of his big theory he would have been a great naturalist. His studies on ecology, botany, hybridization, agriculture or other areas of biology would have been enough. One of my favourites is The Expression of the Emotions in Man and Animals (1872), which demonstrates the universality of many human emotions and their similarities with animal behaviour, and lays the groundwork for examining emotion in a naturalistic way:

The term "disgust," in its simplest sense, means something offensive to the taste. It is curious how readily this feeling is excited by anything unusual in the appearance, odour, or nature of our food. In Tierra del Fuego a native touched with his finger some cold preserved meat which I was eating at our bivouac, and plainly showed utter disgust at its softness; whilst I felt utter disgust at my food being touched by a naked savage, though his hands did not appear dirty. A smear of soup on a man's beard looks disgusting, though there is of course nothing disgusting in the soup itself. I presume that this follows from the strong association in our minds between the sight of food, however circumstanced, and the idea of eating it.

It would have been fun to know what the native thought about the encounter with British "food".

While Darwin was not alone in thinking about evolutionary processes, I think he contributed much to the success of evolutionary theory by gathering strong and wide-ranging supporting data. One could easily imagine a situation like that of Alfred Wegener's continental drift, where a good idea languishes for a long time (or is even apparently disproven) for lack of data. There is a lesson there for all who think they have a great insight: it is not enough to be right, you need to assemble a mountain of evidence too.

Turning to evolution, what I think is most amazing is not that local, short-sighted events produce a global "rational" outcome (although that is pretty amazing too!). Many other processes have this property, such as relaxation methods in mathematics, or markets. The unusual property of evolution (shared with some markets) is that it is highly creative: instead of converging to something optimal, it tends to produce variations that grow in new directions. It finds new niches and new ways of exploiting niches. New kinds of bodies allow additions to the process, be it social organisation, learning, extended phenotypes or cultural transmission.

This creativity likely cannot happen in simple environments or with simple bodies. There must be differing environmental demands and sufficiently open-ended development systems to drive it. This is why my Botticelli-eaters will never evolve much further than in the image above: their environment has a pretty low complexity, and their "bodies" are specified by a very limited number of parameters. There is a bit of a loophole in that different "species" can affect each other, but the overall effect is small. In the real world we have a complex environment with different phases of matter, different concentrations of matter and energy with varying statistical distributions on different timescales, and biological structures built up from the inside using a very versatile and open-ended code and machinery.

Darwin seems to have appreciated the strong links between individual organisms, their local communities, the ecosystem, the physical environment and their past. I think (although I'm hardly an expert on this) that this was a very early and fairly uncommon insight. He recognized that evolution is interactive and not just something that is imposed from the outside. It is bottom-up: a multitude of interactions meet in every organism, being refracted into development and behaviour that produces new interactions.

Thus, from the war of nature, from famine and death, the most exalted object of which we are capable of conceiving, namely, the production of the higher animals, directly follows. There is grandeur in this view of life, with its several powers, having been originally breathed into a few forms or into one; and that, whilst this planet has gone cycling on according to the fixed law of gravity, from so simple a beginning endless forms most beautiful and most wonderful have been, and are being, evolved.

(the title of the post refers to a song by the Swedish humorist Povel Ramel celebrating Darwin)

February 12, 2009

Using the country as a map of itself

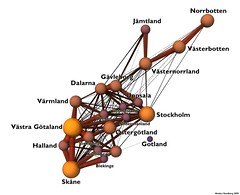

The physics arXiv blog posts about Simulating Sweden, a paper about MicroSim, a large scale epidemic simulation that contains official data on all registered Swedish inhabitants.

The amusing thing with the blog is the shocked tone:

That makes for potentially fantastic simulations but it also raises extraordinary questions over privacy. The data is only minimally anonymized: each individual is given a random identifier but otherwise their personal data is intact. Given that the team is combining data from three different sources, this doesn’t sound like nearly enough protection.

But Brouwers must know what she’s doing. Or at least be praying that the rest of Sweden doesn’t find out what she’s done.

Sometimes it is revealing to see oneself with outside eyes. What would appear to an American as a horrific crime against privacy would most likely be regarded by most Swedes as a mildly good thing. After all, figuring out how to deal with avian flu is sensible, right? And the individuals in the simulation have their personal identity numbers replaced with a random code. And the Central Bureau of Statistics has given ethics approval.

Many of the results are eminently useful (just look at these *one million men* cohort studies of the link between IQ and cancer and homicide risk). I might not be in the epidemic simulation, but I am a datapoint in the cohort studies.

We have moved pretty far from project Metropolit in 1986, where the public discovery of a sociological research project that mapped the lives of 15,000 Stockholmers led to its official closure. Today nobody minds that government databases are combined in the population and housing census (which since 2005 has been entirely digital). As an article on integrity in Forskning & Framsteg 4/07 (in Swedish) points out, the debate about personal integrity ended in the 80's (what remains may be more of an educated elite project). As Swedes' trust in their government has increased, computers have become part of everyday life and many useful services have been built on the databases, so people have simply become used to being mapped. It looks like the classic "boiled frog scenario".

Yet if the aim of the heating is only to keep the frog warm and cozy, the transition might be benign. The problem is that the frog is in a situation where the heat could easily be turned up - deliberately or accidentally. Governments have in the past done this (including the Swedish one). In a situation where people do not trust their government they will at least try to keep track of what it is doing to them, and in a situation where the government is perceived as incompetent people might refuse to give it control over sensitive parts of their lives.

February 11, 2009

Opaque preferences in transparent skulls

Practical Ethics: Transparent brains: detecting preferences with infrared light - I blog on the possible problematic consequences of the cool study that demonstrated that one can detect preferences using infrared scans of the frontal lobes. The basic problem is that this is prime material for neurohype that ignores that real preferences are pretty complex. Simple scanning methods invite wider use than complex ones, so I see a real risk that this result will be used in a lot of stupid ways.

However, it is an interesting question how a society with transparent preferences would work. Right now we have one where preferences can be partially inferred (and a lot of people *think* they are good at discerning the thoughts and preferences of others - often wrongly).

If some groups can see the preferences of others but they cannot look back, then the information balance is going to be problematic. So clearly a transparent preference society needs to be two-way transparent to be just. Similarly it needs to be highly tolerant in order not to become highly conformist. The same problems as for any transparent society, really.

Preferences likely need to be elicited to be perceived. So I would notice when somebody checks whether I prefer Coke over Pepsi, boys over girls or armiphlanges over unclefts. The elicitation process is likely to introduce noise or bias, so this would be the weak point in trying to figure out my real preferences. Besides that, there is the complexity of preferences themselves: I suspect most real preferences are strongly situational and intransitive.
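If a hypothetical scanner elicited preferences pair by pair, intransitivity would show up as a cycle (A over B, B over C, C over A) in the directed graph of reported choices. A minimal depth-first-search sketch for finding such a cycle; the function name and the input format (a list of (preferred, rejected) pairs) are illustrative assumptions:

```python
def find_preference_cycle(prefers):
    """`prefers` is a list of (a, b) pairs meaning "a was preferred over b".

    Returns a cycle such as ['Coke', 'Pepsi', 'water', 'Coke'] if the
    elicited preferences are intransitive, otherwise None."""
    graph = {}
    for a, b in prefers:
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, [])
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on current path, finished
    colour = {node: WHITE for node in graph}
    path = []

    def dfs(node):
        colour[node] = GREY
        path.append(node)
        for nxt in graph[node]:
            if colour[nxt] == GREY:  # back edge: the choices loop around
                return path[path.index(nxt):] + [nxt]
            if colour[nxt] == WHITE:
                cycle = dfs(nxt)
                if cycle:
                    return cycle
        path.pop()
        colour[node] = BLACK
        return None

    for node in graph:
        if colour[node] == WHITE:
            cycle = dfs(node)
            if cycle:
                return cycle
    return None
```

Finding no cycle only means the elicited pairs are consistent; given the noise and situational effects mentioned above, a real analysis would also have to separate genuine intransitivity from measurement error.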

But would the extra preference information make the economy more efficient? It might certainly make advertising a bit better and more personalised, but in many markets the benefit of personalised information is smaller than the cost, especially if preferences are naturally muddled.

An interesting application might be to check one's own preferences. How much do I *really* like that painting? I'm experiencing some doubts, but my brain response was pretty clear... aha, it is my elitism that makes me hide that I actually do like rainbows and unicorn paintings. Might be useful, might just be a way of setting up a rationalizing feedback.

February 10, 2009

How to accidentally mislead experts

I'm very fond of information visualisation and communication issues (copies of Tufte everywhere, I read Information Aesthetics every morning...). Besides the enjoyment of good design and the epistemic insights from well-presented information there is the equally important matter of discerning when and how information is misleading. The most common case is that mis-presented information misleads amateurs or outsiders to the field, but here is an interesting example of how it can mislead experts just outside the community producing the information, while amateurs do not fall into the same trap.

K. Eric Drexler has a great post, Nanomachines: How the Videos Lie to Scientists, that describes how a certain kind of nanotechnology visualisation may have been very misleading - to the professionals in the field. He writes:

By now, many scientists have seen videos of molecular-scale mechanical devices like the one shown here, and I have no way to know how many have concluded that the devices are a lot of rubbish (and have perhaps formulated an unfortunate corollary regarding your author). The reasons for this conclusion would seem clear and compelling to someone new to the topic, but because I was too close to it, I saw no problem. Familiarity with the actual dynamics of these devices kept me from recognizing that what the videos seem to show is, in one crucial respect, utterly unlike reality.

The problem is that in a typical animation of molecular machinery the parts move at speeds on the same order as the thermal vibrations. This produces an exciting image (wobbling atoms, molecular gears turning), but as Drexler notes, someone who understands molecular systems (but is not versed in the design assumptions of the machine) will think that there will be a lot of coupling between the machine parts and thermal motion - lots of friction and heat dissipation. Hence they would just assume that the system will be unworkable.

The intended speed of the parts is several orders of magnitude lower, but that would not make an exciting animation (see some examples in Eric's post). So the visual demands of a striking image, together with the difficulty of recognizing that what a certain group will perceive is not what is intended, have led to a plethora of misleading visualisations.
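To get a feel for the size of the mismatch, here is a back-of-the-envelope Python sketch. It uses the classical equipartition estimate of the rms thermal speed, sqrt(3kT/m), which gives each atom an average kinetic energy of (3/2)kT whether it is free or bonded, for a carbon atom at room temperature. The 1 cm/s design speed it compares against is a hypothetical value I picked purely for illustration, not a figure from Drexler's post.

```python
import math

K_B = 1.380649e-23       # Boltzmann constant, J/K
AMU = 1.66053906660e-27  # atomic mass unit, kg

def thermal_speed(mass_amu: float, temp_k: float) -> float:
    """Classical rms thermal speed sqrt(3kT/m) of an atom, in m/s."""
    return math.sqrt(3 * K_B * temp_k / (mass_amu * AMU))

def orders_of_magnitude_slower(part_speed: float, thermal: float) -> float:
    """How many powers of ten the part speed sits below the thermal speed."""
    return math.log10(thermal / part_speed)

# A carbon atom (12 u) at room temperature: roughly 790 m/s.
v_carbon = thermal_speed(12.0, 300.0)

# Hypothetical design speed for a moving gear surface (an assumption): 1 cm/s.
design_speed = 0.01

gap = orders_of_magnitude_slower(design_speed, v_carbon)
print(f"thermal ~{v_carbon:.0f} m/s vs design {design_speed} m/s: "
      f"about {gap:.1f} orders of magnitude apart")
```

An animation that shows gears turning as fast as the atoms wobble collapses this gap of several orders of magnitude to zero, which is exactly the impression that misleads the expert viewer.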

The problem is probably pretty common. Not even researchers understand error bars, and in general few readily understand how experts from other disciplines would (mis)interpret their information. Add to this the illusion of transparency bias that makes us overestimate how well we understand how others think, and it is likely that a lot of professionals disagree due to minor miscommunications. It could be small and localized, like this example where a kind of visualisation does the damage, or it could be more fundamental due to deeper discipline differences ("cornucopian" economists vs. "Malthusian" ecologists might be an example).
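One well-known way error bars trip up readers (I am not claiming this is what any particular study measured) is the rule of thumb that overlapping 95% confidence intervals mean there is no significant difference. A minimal Python sketch, assuming two independent groups, a normal approximation and made-up illustrative numbers:

```python
import math

def ci95(mean: float, se: float) -> tuple[float, float]:
    """Approximate 95% confidence interval: mean +/- 1.96 standard errors."""
    return (mean - 1.96 * se, mean + 1.96 * se)

def intervals_overlap(a: tuple[float, float], b: tuple[float, float]) -> bool:
    """True if the two closed intervals share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]

def z_for_difference(m1: float, se1: float, m2: float, se2: float) -> float:
    """z statistic for the difference between two independent means."""
    return abs(m2 - m1) / math.sqrt(se1 ** 2 + se2 ** 2)

# Hypothetical numbers: two group means one unit apart, equal standard errors.
m1, m2, se = 0.0, 1.0, 0.3
ci_a, ci_b = ci95(m1, se), ci95(m2, se)
z = z_for_difference(m1, se, m2, se)

print("95% CIs overlap:", intervals_overlap(ci_a, ci_b))
print(f"z = {z:.2f}, significant at p < 0.05: {z > 1.96}")
```

The intervals overlap, yet the difference is significant, because the standard error of a difference is only sqrt(2) times the individual standard errors, not twice them.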

Non-experts will of course be misled quite often too, but this is widely recognized and people work hard to fix it. Much less work is spent on making sure experts interpret the information right. Good visualisations are about communication. Sometimes that might necessitate making one for the experts (showing nearly motionless gears with thermal motion, or moving gears with blurred atoms) and one for the non-experts (Look! It is a gear! Made of atoms! That wobble!)

February 09, 2009

Xkcd understands me!

xkcd - A Webcomic - TED Talk brings up an issue I have been agonizing over for many years: how do we end parenthetical statements with emoticons? I am not alone.

I hate unbalanced recursions (this is why when someone sneezes multiple times I say the same number of "prosits" as the number of sneezes). But if the emoticon represents a unified iconic symbol then the bracket glyph does not denote its usual semantic meaning of end-bracket, and is neutral in respect to the parenthetical statement. Which would speak in favour of alternative 2. Which still looks weird.

I sometimes try to leave an extra space, but then it just looks like my emoticon has a turkey-neck:

Linux (or BSD :) ) would...

Inline images cannot be relied on, since the text can be read in any medium and should be backwards compatible with everything back to ENIAC.

Maybe one could simply use different kinds of brackets when using emoticons:

Linux [or BSD :) ] would...

or

Linux {or BSD :) } would...

This actually looks good, especially the first case. But it requires anticipating the emoticon at the end of the statement; otherwise one has to jump back briefly and edit the opening parenthesis into the right kind of bracket.

Looking at what others have blogged it seems that people have come up with various solutions. One nice possibility is to let the emoticon "bubble" up one level and appear right of the statement:

Linux (or BSD) :) would...

The downside is that the connection between the statement and the brief smile is lost.

In A Treatise on Emoticons it is stated that "one shall leave a space between the emoticon and the close-parenthesis." However, the motivation is more visual ("otherwise it looks weird") than the idea that emoticons terminate sentences.

I think I will go for either the space separator or use of other brackets {which has the useful property that one can also more easily parse multi-level statements [like this one :-)]}.
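The "emoticon as one unified symbol" reading can be made precise with a small bracket checker that skips emoticons as atomic tokens before matching (), [] and {}. This is a minimal Python sketch; the emoticon list is a hypothetical, deliberately tiny set, and a real text would need many more.

```python
# Hypothetical, deliberately small set of emoticons treated as single symbols.
EMOTICONS = (":-)", ":)", ":-(", ":(")
PAIRS = {")": "(", "]": "[", "}": "{"}

def brackets_balanced(text: str) -> bool:
    """Check bracket nesting, treating each emoticon as one atomic symbol."""
    stack = []
    i = 0
    while i < len(text):
        # Skip an emoticon whole, so its ')' is never read as a closing bracket.
        for emo in EMOTICONS:
            if text.startswith(emo, i):
                i += len(emo)
                break
        else:
            ch = text[i]
            if ch in "([{":
                stack.append(ch)
            elif ch in PAIRS:
                # A closer must match the most recent unmatched opener.
                if not stack or stack.pop() != PAIRS[ch]:
                    return False
            i += 1
    return not stack

print(brackets_balanced("Linux (or BSD :)) would..."))
print(brackets_balanced("Linux (or BSD :) would..."))
```

Under this reading the doubled ":))" is balanced and the single ":)" leaves the parenthesis open, which is the argument for alternative 2; mixed brackets such as "{... [like this :-)]}" also parse cleanly.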

February 06, 2009

Built up those prefrontal muscles!

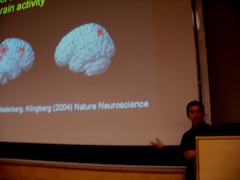

Changes in Cortical Dopamine D1 Receptor Binding Associated with Cognitive Training -- McNab et al. 323 (5915): 800 -- Science shows a cute result of training working memory: increased dopamine D1 receptor binding. This might explain how the improvement in working memory comes about. Since Torkel has earlier used the same training to treat kids with ADHD, a re-tuning of dopamine might make sense.

Wild speculation: I wonder if it also has effects on the reward system and hence personality? It could also change the setpoint of the inverted-U curve of dopamine response.

February 02, 2009

The knees of Carlson's, Smart's and Moore's laws

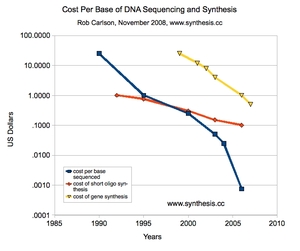

Gene Synthesis Cost Update by Rob Carlson shows an interesting update of his earlier graphs of the cost of sequencing and gene synthesis. His "law(s)" remain on track, with gene sequencing costs per base pair declining about 0.3 orders of magnitude per year and gene synthesis going down perhaps 0.2 orders of magnitude per year. It seems that short oligo synthesis is the slowest to improve, declining by just one order of magnitude in ~15 years. So is this going to be the future bottleneck on the synthesis side?
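Rates quoted in orders of magnitude per year are easier to compare as halving times. A minimal Python sketch of the conversion, using only the rates stated above (the oligo rate taken as one order of magnitude per 15 years):

```python
import math

def halving_time_years(orders_per_year: float) -> float:
    """Years for cost to halve, given the yearly decline in log10(cost)."""
    return math.log10(2) / orders_per_year

# Rates as quoted in the text above.
rates = {
    "sequencing": 0.3,
    "gene synthesis": 0.2,
    "short oligo synthesis": 1 / 15,
}

for name, rate in rates.items():
    factor = 10 ** rate  # yearly cost reduction factor
    print(f"{name}: cost falls ~{factor:.2f}x per year, "
          f"halving every {halving_time_years(rate):.1f} years")
```

This gives halving times of roughly one year for sequencing, one and a half years for gene synthesis and about four and a half years for short oligos, which makes the possible bottleneck easy to see.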

The rapid decline of sequencing cost means that gene fingerprinting of food (what species was it? is it GMO? any harmful bacteria?), of the environment (what species are around?) and of people is very likely in the future.

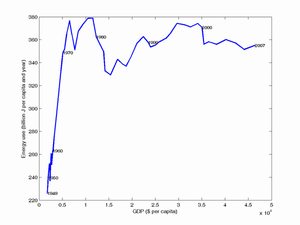

But then again, one should never trust just a graph. Here is a favourite plot of mine, US energy use per capita (vertical) vs. US GDP per capita (horizontal) (I saw it in a presentation by John Smart). Up until 1973 it was an amazingly straight (or even exponential) curve. One can easily imagine an economist predicting a firm relationship between energy use and GDP. Then the curve suddenly turned on a dime and became roughly horizontal for the next 35 years.

What happened at the knee of the curve was the oil crisis, which suddenly made energy usage price-limited. Despite continuing rises in living standards (the continuing rightward move in the plot), energy use remained essentially constant thanks to less waste, technological improvements etc.

The interesting aspect is that the knee in the curve was to my knowledge not predicted beforehand, and was likely inconceivable in the previous period - a bigger economy *obviously* needs more resources! (many people clearly think so today, too.)
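In hindsight a knee is easy to locate; one simple way (my choice for illustration, not how any of the plots here were made) is a two-segment least-squares fit that tries every split point and keeps the one where two separate lines fit best. A minimal Python sketch on synthetic data, not the energy/GDP series:

```python
def fit_line(xs, ys):
    """Ordinary least-squares line; returns (slope, intercept, sum of squared errors)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    sse = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    return slope, intercept, sse

def find_knee(xs, ys, min_points=2):
    """Return the index k where fitting xs[:k] and xs[k:] separately gives the lowest total error."""
    best_k, best_sse = None, float("inf")
    for k in range(min_points, len(xs) - min_points + 1):
        _, _, sse_left = fit_line(xs[:k], ys[:k])
        _, _, sse_right = fit_line(xs[k:], ys[k:])
        if sse_left + sse_right < best_sse:
            best_k, best_sse = k, sse_left + sse_right
    return best_k

# Synthetic data: steady growth, then an abrupt plateau.
xs = list(range(20))
ys = [2 * x if x < 10 else 19 for x in xs]
k = find_knee(xs, ys)
print("knee between x =", xs[k - 1], "and x =", xs[k])
```

On this synthetic series the search puts the knee between x = 9 and x = 10. Note that it only works once the data after the knee exist; it says nothing about predicting a knee beforehand, which is the hard part.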

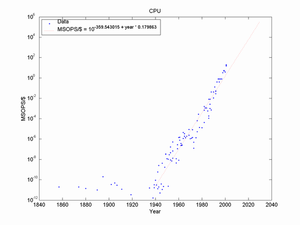

Even Moore's law has a knee! Using Bill Nordhaus' data I got this picture:

Here the knee was the introduction of the electronic and/or programmable computer, triggering exponential growth. The fun thing with Moore's law is that here people have been arguing since day one that it will end (Moore himself suggested that it would end in his 1965 paper) but it has refused to budge. Perhaps because it is too *useful* to be given up: people will do their best to drive it further.

This suggests that Carlson's laws will persist for a long while: they have strong support (unlike the energy/GDP relation, which nobody truly cared about) and keep finding new uses (which is largely why computers took off).

But we should remember that past experience also suggests that inconceivable knees can show up with surprising speed.

Can we win the War on Aging?

Julian blogs on Practical Ethics: Why We Need a War on Aging - a post based on his presentation at the 2009 World Economic Forum (with support from Nick Bostrom and Aubrey de Grey).

I agree, of course. It is just that I'm worried that the "war on ageing" might be as inefficient as the wars on cancer, drugs and terrorism (cf. these scenarios). There is a real risk of focusing on symptoms rather than causes when fighting this kind of "war". In the case of ageing the goal is at least pretty clear (the decline in health caused by damage from a metabolism that evolved with little regard for the soma), but "fixing ageing" is not just about the biomedicine; it is also about how to organise a society where people age less.

People love to bring up objections like overpopulation, pensions or static job markets as if they were substantial objections to the whole project. But that is like arguing (back in the 1800s) that we should continue slavery, since the economic and social impact of abolition would be enormous. Those anti-abolition arguments were actually correct: the impact was big. Yet the moral motivation for abolition was stronger, both then and in retrospect. The fact that one could tear up a sizeable economic and social system and handle the consequences is actually good evidence that we could do something similar for ageing (especially since the death and pain toll of ageing is far, far higher than slavery ever achieved). We might have to have fewer children or have mandatory career changes every century, or (more likely) we will come up with entirely new solutions to the problems. But it is unlikely we will be lamenting the end of ageing, just as we do not lament the loss of slavery, smallpox or the divine right of kings.

February 01, 2009

Litigation, lies and libel

On Practical Ethics I blog about Lies, libel and layered voice analysis - how an Israeli company used UK libel law to silence Swedish researchers. Fixing UK libel law is probably one of the more important things for worldwide freedom of speech; right now it is an enormous legal exploit.

The critical paper in question shouldn't be too surprising. There is an awful lot of bad science sold as effective technology in this area, making lots of money and convincing decision-makers that they have the tools for a nicely truthful surveillance society. In reality it is largely based on deception: people who think their truthfulness can be determined are more likely to acquiesce to authorities.

The case is interesting because it also so clearly shows how vulnerable scientific publishing is to litigation threats: it is actually surprising that it happens so rarely. Maybe because from a legal point of view science is of surprisingly little importance.

I'm reading Nicholas P. Money's excellent book Carpet Monsters and Killer Spores about indoor molds, and he makes the same point. Although litigation about mold damage may become a multibillion dollar business in the US, mycologists are irrelevant to it. Nobody wants to hear scientific uncertainty in the courtroom.