October 02, 2006

The Future of Images

The latest issue of ACM Transactions on Graphics (TOG) (July 2006, 25:3) is the Proceedings of the ACM SIGGRAPH 2006 conference in Boston, MA, so it gives a sense of where the state of the art in computer graphics is. One of the strongest impressions I got was that photography is going to change. Images are becoming just as dynamic and laden with inferential promiscuity as computer-readable textual information.

Now that rendering has more or less achieved full photorealism, one way to go is of course expressive non-photorealism, but merging photography with rendering (image-based rendering) is also popular. One can also move into the even harder domain of video (lots of Hollywood interest, of course). At the same time computer vision has become involved in manipulating photography. All this points towards a situation where there is no distinction between photographing, rendering and image processing.

Daniel Cohen-Or, Olga Sorkine, Ran Gal, Tommer Leyvand, Ying-Qing Xu describe a method of harmonising colors in photos. Apparently there are a few basic "color harmonies" (like having most colors on opposite sides of the color circle, or separated by 90 degrees, etc.), so by analysing the existing colors it becomes possible to nudge them to the closest harmonious color scheme. Some trickery is needed to deal with extended fields of colors so they all get transformed the same way.
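To make the "nudge to the closest harmony" idea concrete, here is a minimal sketch that works on a plain list of hue angles in degrees. It is not the authors' algorithm: the template is reduced to a set of hue centers (the default `offsets=(0, 180)` stands for the "opposite sides of the color circle" harmony), the best rotation is found by brute force, and the spatial trickery for extended fields of color is ignored entirely. All function names and parameters are made up for illustration.

```python
def angular_diff(a, b):
    """Signed smallest difference a - b in degrees, in [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0


def nearest_center(hue, centers):
    """Return the template center closest to `hue` on the color circle."""
    return min(centers, key=lambda c: abs(angular_diff(hue, c)))


def fit_template(hues, offsets, step=1.0):
    """Brute-force the template rotation with the smallest total hue distance."""
    best_rot, best_cost = 0.0, float("inf")
    rot = 0.0
    while rot < 360.0:
        centers = [(rot + o) % 360.0 for o in offsets]
        cost = sum(abs(angular_diff(h, nearest_center(h, centers))) for h in hues)
        if cost < best_cost:
            best_rot, best_cost = rot, cost
        rot += step
    return best_rot


def harmonize(hues, offsets=(0.0, 180.0), strength=0.5):
    """Move each hue a fraction `strength` of the way to its nearest center."""
    rot = fit_template(hues, offsets)
    centers = [(rot + o) % 360.0 for o in offsets]
    result = []
    for h in hues:
        c = nearest_center(h, centers)
        result.append((h + strength * angular_diff(c, h)) % 360.0)
    return result


if __name__ == "__main__":
    # Three reddish-orange hues and one cyan-blue hue (hypothetical data).
    print(harmonize([10.0, 20.0, 30.0, 200.0], strength=0.5))
```

A real tool would apply this to the hue channel of every pixel (for instance after an RGB to HSV conversion) and would weight the fit by saturation, which this sketch leaves out.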

Noah Snavely, Steven M. Seitz, Richard Szeliski developed a system for "photo tourism", where a large number of images from the same place (such as the results found after a Google Images or Flickr search) are matched. It deduces the original camera locations and produces a 3D model of the scene showing where the cameras were, enabling the user to jump between the perspectives, zoom in on details by finding pictures of them, and annotate images.

Erum Arif Khan, Erik Reinhard, Roland W. Fleming, Heinrich H. Bülthoff do image based material editing: from a photo enough information can be extracted to change the material of an object, turning it transparent, metallic or anything else. The trick is of course that the eye doesn't notice the pretty big approximations that happen and gladly allows itself to believe in the fake refractions or reflections.

Jiaya Jia, Jian Sun, Chi-Keung Tang, Heung-Yeung Shum do drag-and-drop pasting, essentially a much smarter lasso tool that enables pasting objects between images while respecting object boundaries, without forcing the user to painstakingly mark them.

Rob Fergus, Barun Singh, Aaron Hertzmann, Sam T. Roweis, William T. Freeman describe a way of removing camera shake from photos. I remember how awed I was when I learned about Wiener filtering and how it could remove motion blur, but that required knowledge of how the camera had shaken. This method instead figures out a likely blur kernel and then removes it.
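For readers who have not met Wiener filtering, here is a minimal 1D sketch of the classical case where the blur kernel is known. It is not the method from the paper, which has to estimate the kernel first. The sketch assumes circular blur, a constant noise-to-signal ratio, and a naive DFT written out by hand to stay dependency-free; the function names and the example kernel are made up for illustration.

```python
import cmath


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (inverse divides by n)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
        out.append(s / n if inverse else s)
    return out


def circular_blur(signal, kernel):
    """Blur `signal` by circular convolution with `kernel`."""
    n = len(signal)
    return [
        sum(kernel[j] * signal[(i - j) % n] for j in range(len(kernel)))
        for i in range(n)
    ]


def wiener_deblur(blurred, kernel, noise_to_signal=1e-3):
    """Wiener deconvolution: F = G * conj(H) / (|H|^2 + K) per frequency."""
    n = len(blurred)
    padded = list(kernel) + [0.0] * (n - len(kernel))
    H = dft(padded)   # frequency response of the known blur
    G = dft(blurred)  # spectrum of the observed signal
    F = [g * h.conjugate() / (abs(h) ** 2 + noise_to_signal) for g, h in zip(G, H)]
    return [v.real for v in dft(F, inverse=True)]


if __name__ == "__main__":
    signal = [0.0] * 16
    signal[5], signal[10] = 1.0, 0.5
    kernel = [0.5, 0.3, 0.2]  # hypothetical smear along the motion direction
    blurred = circular_blur(signal, kernel)
    restored = wiener_deblur(blurred, kernel, noise_to_signal=1e-6)
    print([round(v, 3) for v in restored])
```

The `noise_to_signal` term is what keeps the division from blowing up at frequencies the blur has nearly wiped out. For real photos one would use a 2D FFT from a numerical library instead of the hand-written transform.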

Carsten Rother, Lucas Bordeaux, Youssef Hamadi, Andrew Blake have developed an "autocollage" system that takes a set of images, finds representative images that are different, finds the important objects in them (especially taking care with faces), distributes them over a surface and interpolates together a collage.

So far this is enormously complex, requires expertise and is computationally expensive. Almost none of the techniques discussed in the papers look like anything one could put into the hands of a non-expert working in real time on an ordinary PC. However, that is going to change over the next few years. My prediction is that many of the techniques will become easily packaged elements of standard photo-editing (or embedded camera) software, just as the once-advanced histogram and anti-redeye methods are now everywhere.

De-blurring of images and automatic color harmonisation won't make everybody a photographic artist, but they are sure going to make everything prettier. Smart pasting and composition tools are going to make everybody a potential photo-retoucher or history editor. Of course, they are also going to produce endless visual cliches, like overused Photoshop filters and font fruit salads. Merging photo collections into 3D databases that enable editing of object appearances or extraction of 3D models, and moving through "camera space" using online image databases, turn photo collections into something far more powerful than just images. They actually start to link the real and virtual worlds.

Add the ubiquity of cellphone cameras and other imagers to this. We have already had a democratization of photography thanks to digital cameras and online photo sharing. This gets combined with the folksonomies generated by Web 2.0 and geotagging. Suddenly the Great God Internet gets a significantly better visual perceptive system, where every photo starts to add a bit of meaning for the automatic systems in it rather than just for the humans viewing it. And this is even without any advanced image recognition that can tell exactly what is in these pictures.

At the same time all this suggests a need to keep track of the provenance of images: when we start creating virtual environments based on images, we will need to know how strongly they have been edited and how. So besides the usefulness for intellectual property of adding authorship metadata that cannot easily be removed or lost, we would want such metadata to tell us how changed the image has become compared to the original base reality. Of course, the Great God Internet can likely deal with a bit of false memories and bias just as we do; our own memories are quite constructed anyway.

So my guess, inspired by these papers, is that in the future images are going to be far more dynamic objects than today, in about the same way as text has gone from something fixed and stable to something that is endlessly recombined and automatically collated, summarized, analysed and hyperlinked.

A few other papers

Aude Oliva, Antonio Torralba, Philippe G. Schyns have a paper on hybrid images, where they mix the low frequencies of one image with the high frequencies of another, resulting in an image that changes depending on viewer distance. Faces change mood, gender or species, text can become readable only within a certain range and so on. Very fun and simple trick.

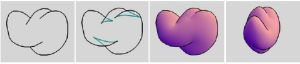
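The hybrid image trick is simple enough to sketch in a few lines. The version below is only an illustration under stated assumptions: images are grayscale nested lists of floats with the same size, and a box blur stands in for the low-pass filter (not necessarily what the paper uses). The function names are made up.

```python
def box_blur(img, radius):
    """Low-pass filter: average over a (2*radius+1) square, clipped at edges."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def hybrid_image(far_img, near_img, radius=2):
    """Low frequencies of `far_img` plus high frequencies of `near_img`."""
    far_low = box_blur(far_img, radius)
    near_low = box_blur(near_img, radius)
    h, w = len(far_img), len(far_img[0])
    return [
        [far_low[y][x] + (near_img[y][x] - near_low[y][x]) for x in range(w)]
        for y in range(h)
    ]
```

Up close the eye picks up the fine detail from `near_img`; from far away only the blurred `far_img` component survives. Results may fall outside the original intensity range, so a real implementation would clamp or rescale before display.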

Olga A. Karpenko, John F. Hughes describe a system to create smooth 3D objects from simple line sketches. It is a generalisation of the old work in AI and computer vision on interpreting drawings, now in a differential geometry setting. Still rather limited, but it is getting there.

Also worth mentioning are the papers on making panoramas out of series of overlapping photos, rendering rain streaks, force field modelling of crowds and making motions more cartoony.

Posted by Anders3 at October 2, 2006 07:15 PM